Global Real-Time Sign Language Avatar Market Size, Share Analysis By Technology Type (AI-driven Sign Language Avatars, Motion Capture-based Sign Language Avatars, Hybrid Systems), By Deployment Mode (Cloud-based, On-premises), By End-Use Industry (Healthcare, Education, Consumer Electronics, Media and Entertainment, Others), By Region and Companies - Industry Segment Outlook, Market Assessment, Competition Scenario, Trends and Forecast 2025-2034

- Published date: August 2025

- Report ID: 155503

- Number of Pages: 257


Report Overview

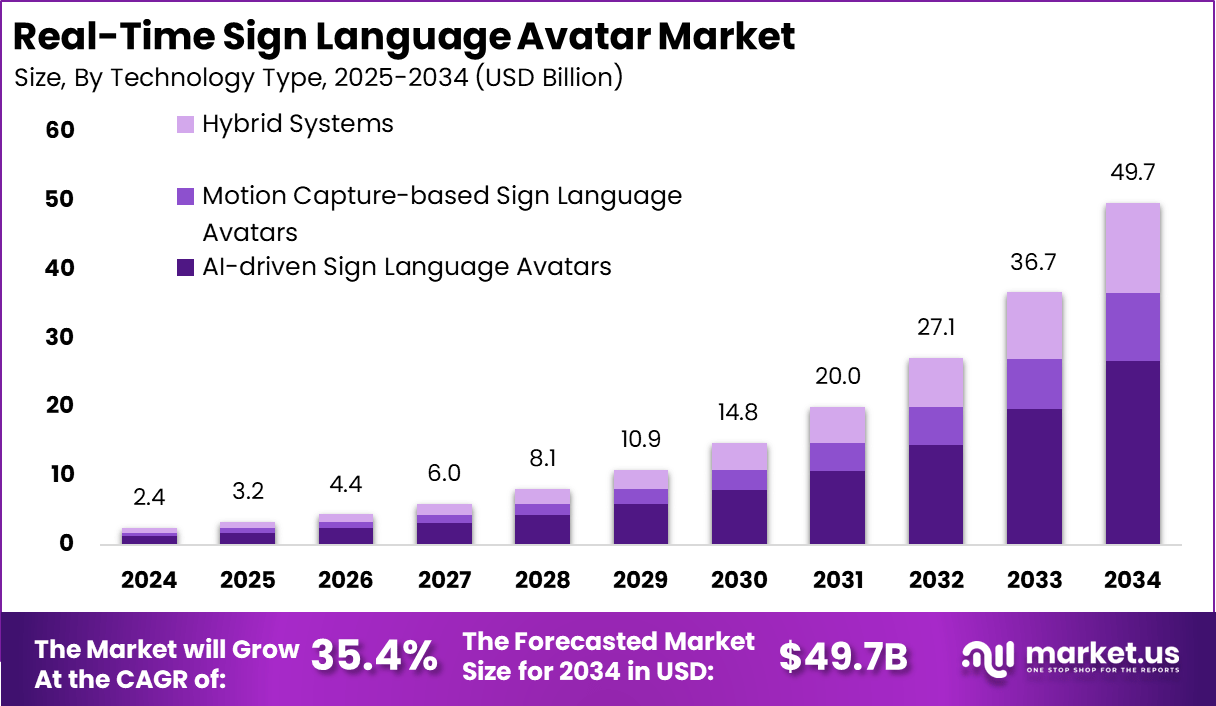

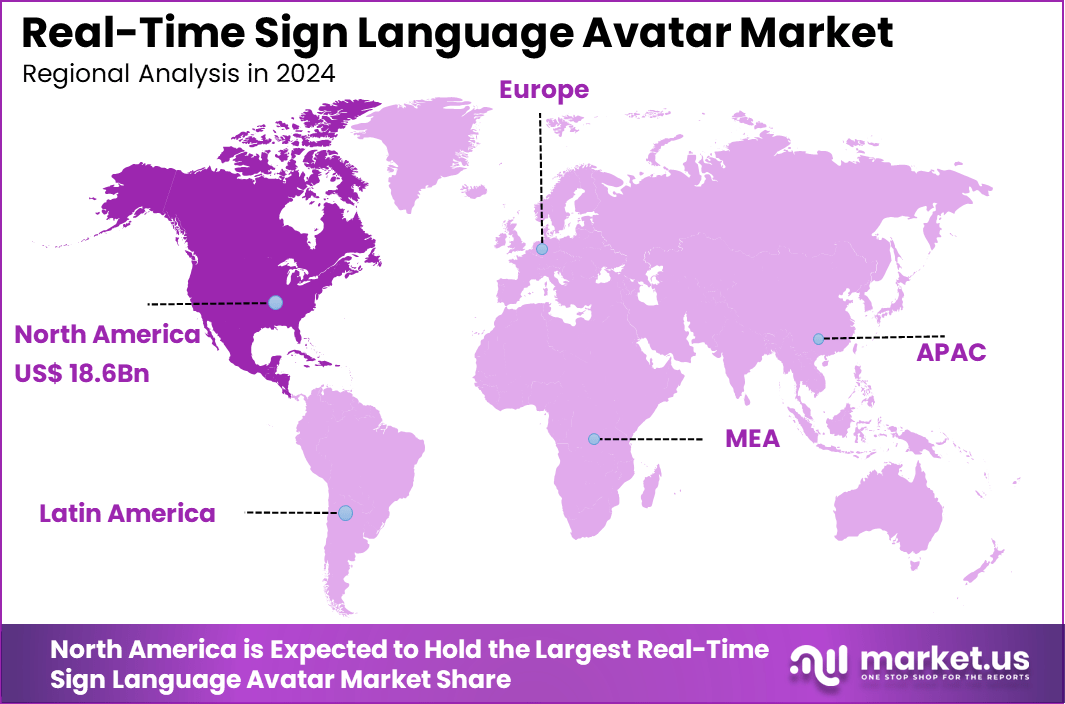

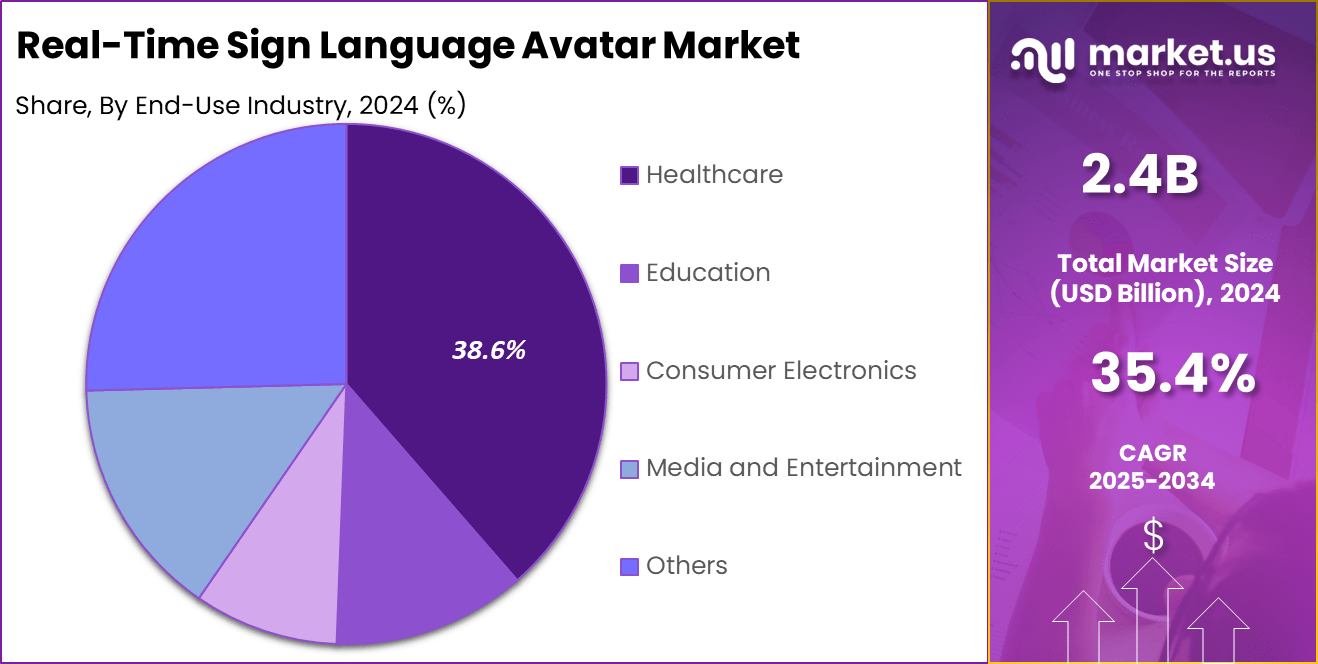

The Global Real-Time Sign Language Avatar Market size is expected to be worth around USD 49.7 billion by 2034, up from USD 2.4 billion in 2024, growing at a CAGR of 35.4% during the forecast period from 2025 to 2034. In 2024, North America held a dominant market position, capturing more than a 37.5% share, equivalent to roughly USD 0.9 billion in revenue.
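The headline figures follow the standard compound-growth relation: the 2034 value equals the 2024 value multiplied by (1 + CAGR) raised to the number of compounding years, which is ten from the 2024 base to 2034. The short Python sketch below is an illustrative check of the report's stated numbers, not the report's own forecasting model; the function names `project` and `implied_cagr` are hypothetical.

```python
def project(base: float, cagr: float, years: int) -> float:
    """Compound a base value forward at a constant annual growth rate."""
    return base * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Back out the constant annual growth rate linking two values."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures as stated in the report (USD billion): 2024 base, 2034 endpoint.
YEARS = 2034 - 2024

global_2034 = project(2.4, 0.354, YEARS)      # about 49.7
us_2034 = project(0.73, 0.317, YEARS)         # about 11.5
global_rate = implied_cagr(2.4, 49.7, YEARS)  # about 0.354

print(f"Global 2034: USD {global_2034:.1f}B")
print(f"U.S. 2034:   USD {us_2034:.1f}B")
print(f"Implied global CAGR: {global_rate:.1%}")
```

Starting the U.S. projection from the rounded USD 0.7 billion instead of USD 0.73 billion gives about USD 11.0 billion for 2034, so the unrounded base is needed to reproduce the USD 11.5 billion figure.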

Key Insight Summary

- AI-driven sign language avatars led the technology type segment with a 53.7% share, reflecting a preference for accurate, adaptive interpretation powered by machine learning.

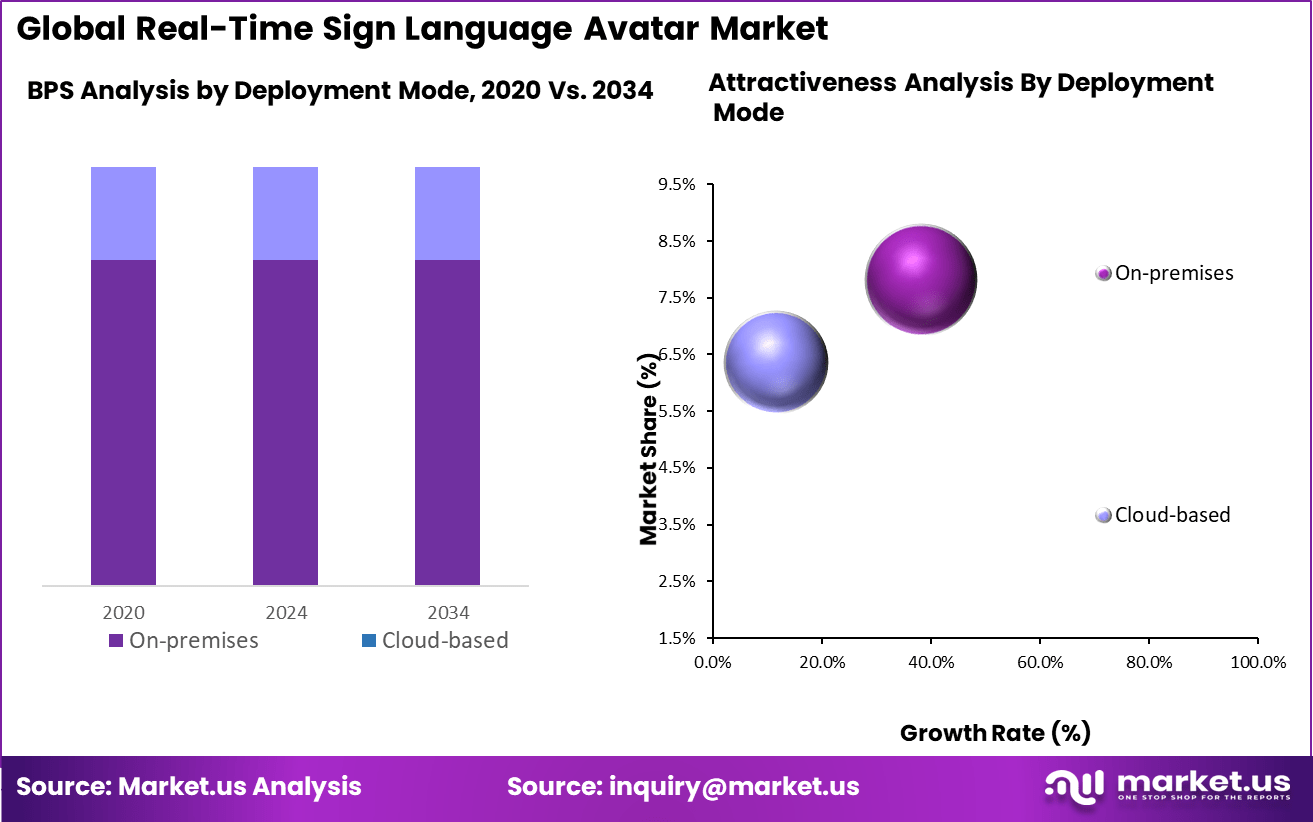

- On-premises deployment dominated with a 68.9% share, indicating demand for data control, lower latency, and compliance in sensitive environments.

- Healthcare was the leading end-use industry at 38.6%, driven by accessibility mandates, patient engagement, and clinical communication needs.

- North America accounted for 37.5% of the global market, supported by strong health systems and accessibility regulations.

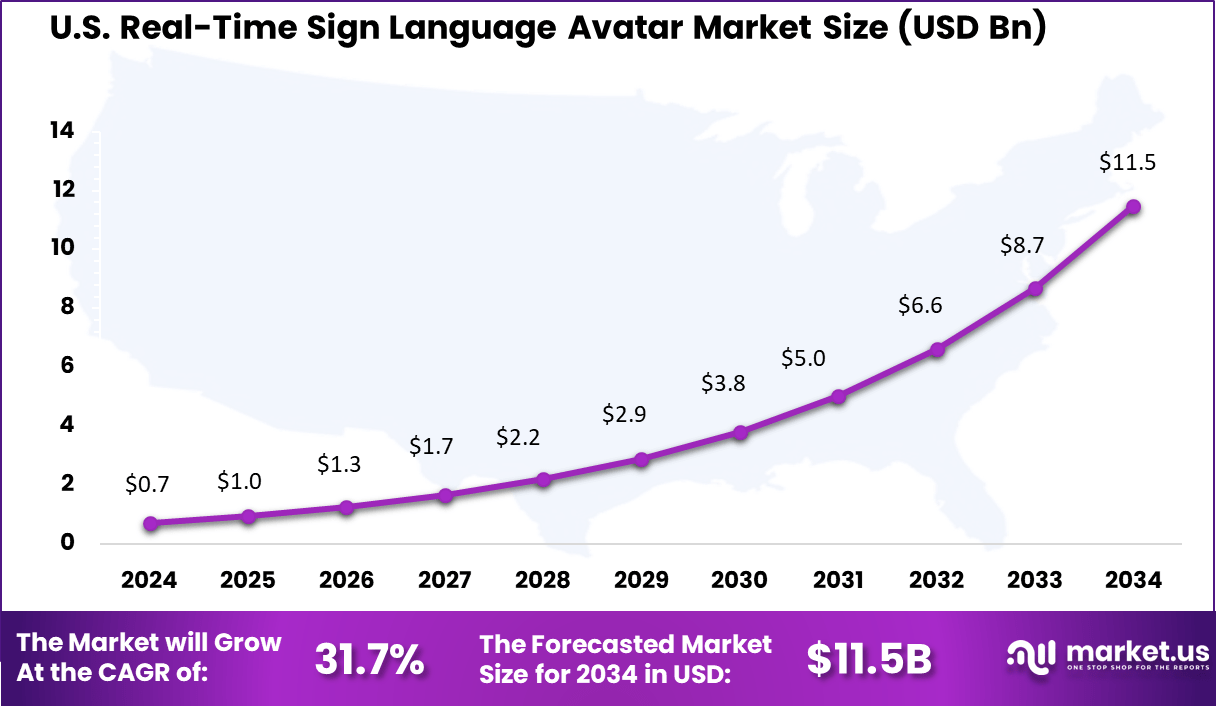

- The U.S. market reached USD 0.73 billion in 2024 and is projected to grow at a CAGR of 31.7%, signaling rapid enterprise and healthcare adoption.

The real-time sign language avatar market refers to solutions that transform speech or text into animated sign language renditions through AI-driven avatars. These solutions aim to enhance communication accessibility for deaf and hard-of-hearing communities by providing visual, expressive, and contextually aware translations. The technology spans software for AI translation, hardware such as sensors and cameras, and supporting services for customization and deployment.
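At a high level, the text-to-sign flow described above maps input words onto a sequence of signs and then schedules avatar animation clips on a timeline. The Python sketch below illustrates that flow under simplifying assumptions: the tiny `LEXICON`, the clip durations, and the letter-by-letter fingerspelling fallback for words with no lexicon entry (similar to how signers often spell out names) are hypothetical placeholders, and commercial systems rely on trained models rather than a dictionary lookup.

```python
from dataclasses import dataclass

# Hypothetical lexicon: word -> (gloss, clip duration in seconds).
LEXICON = {
    "hello": ("HELLO", 0.8),
    "doctor": ("DOCTOR", 0.9),
    "help": ("HELP", 0.7),
    "you": ("YOU", 0.5),
}
FINGERSPELL_SECONDS_PER_LETTER = 0.25  # assumed pacing for fingerspelled letters


@dataclass
class ScheduledSign:
    gloss: str
    start: float     # seconds from the start of the utterance
    duration: float  # seconds


def to_signs(text: str) -> list[tuple[str, float]]:
    """Map each word to a lexicon sign, fingerspelling words with no entry."""
    signs = []
    for raw in text.lower().split():
        word = "".join(ch for ch in raw if ch.isalpha())
        if not word:
            continue
        if word in LEXICON:
            signs.append(LEXICON[word])
        else:
            # Fallback: one fingerspelled letter per character, e.g. "fs-A".
            for letter in word.upper():
                signs.append((f"fs-{letter}", FINGERSPELL_SECONDS_PER_LETTER))
    return signs


def schedule(signs: list[tuple[str, float]]) -> list[ScheduledSign]:
    """Lay clips end to end on a timeline the avatar renderer can play back."""
    timeline, t = [], 0.0
    for gloss, duration in signs:
        timeline.append(ScheduledSign(gloss, t, duration))
        t += duration
    return timeline


for item in schedule(to_signs("Hello, Anna! Doctor help you.")):
    print(f"{item.start:5.2f}s  {item.gloss}")
```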

Key growth drivers for this market include rapid advances in artificial intelligence, machine learning, and computer vision. These innovations enable more accurate, realistic, and responsive sign language avatars that can capture nuanced gestures and expressions. Furthermore, the increasing adoption of digital communication platforms and the demand for real-time accessibility solutions have elevated the need for seamless, instant sign language interpretation.

According to data from Gitnux, approximately 2–3 million Americans actively use sign language, highlighting its crucial role in daily communication for the deaf and hard-of-hearing community. Globally, over 300 distinct sign languages are in use, reflecting a rich diversity of cultural and linguistic expression across regions.

It is observed that about 54% of deaf children are born to hearing parents who may not know sign language, which can create early communication gaps and affect language development. In contrast, around 20% of deaf children worldwide are born to parents who already use sign language, enabling stronger early bonding and improved learning outcomes.

Role of AI

| Role/Function | Description |
|---|---|
| AI-Powered Translation | Use of generative AI and foundation models to convert speech or text into expressive sign language avatar animations in real time. |
| Natural Language Processing (NLP) | AI interprets the context and semantics of spoken or written language for accurate sign language generation. |
| Computer Vision and Gesture Animation | Advanced AI enables realistic avatar hand, facial, and body movements that mimic authentic sign language gestures. |
| Real-Time Processing | Low-latency AI systems enable near-instantaneous translation for live conversations, broadcasts, and customer interactions. |
| Accessibility & Inclusivity Enhancement | AI avatars break down communication barriers for deaf communities, enabling broader inclusion in public, social, and professional settings. |

US Market Size

The U.S. Real-Time Sign Language Avatar market was valued at USD 0.73 billion in 2024 and is projected to reach approximately USD 11.5 billion by 2034, expanding at a robust compound annual growth rate (CAGR) of 31.7% between 2025 and 2034.

This strong growth trajectory is driven by rising demand for accessible digital communication tools, accelerated adoption of AI-powered interpretation technologies, and the enforcement of stringent accessibility regulations.

Increasing investment in inclusive solutions across education, healthcare, corporate communication, and public services is expected to further solidify the U.S. as a global leader in the real-time sign language avatar market over the coming decade.

In 2024, North America held a dominant market position, capturing more than 37.5% of the global Real-Time Sign Language Avatar market and generating approximately USD 0.9 billion in revenue. The region’s leadership is strongly attributed to its advanced digital infrastructure, early adoption of AI-driven communication solutions, and significant investment in accessibility technologies.

High awareness of inclusivity standards, combined with stringent regulations such as the Americans with Disabilities Act (ADA), has encouraged widespread deployment of sign language avatar systems across education, healthcare, corporate, and public service sectors. Additionally, robust funding for R&D and collaborations between technology providers and advocacy groups have accelerated the integration of real-time avatars into mainstream communication platforms.

By Technology Type

In 2024, the AI-driven Sign Language Avatars segment held a dominant 53.7% share of the real-time sign language avatar market. This leadership reflects the advanced capabilities of AI technologies, including machine learning, natural language processing, and computer vision, which enable these avatars to deliver accurate, expressive, and real-time translations of spoken or written language into sign language.

These AI-driven avatars provide a natural communication experience by capturing intricate hand movements and facial expressions, ensuring inclusivity and better engagement for deaf and hard-of-hearing users. Continuous enhancements in generative AI models further equip these avatars to adapt to various dialects and contextual nuances, making them highly versatile across multiple languages and user requirements.

By Deployment Mode

In 2024, the On-Premises deployment mode held a significant 68.9% share of the market. This preference is driven largely by organizations that prioritize data privacy, security, and operational control, especially in sensitive sectors such as healthcare and government. On-premises solutions allow local data processing, which reduces latency and improves the reliability that real-time communication requires in emergency or clinical contexts.

Moreover, on-premises deployments offer greater customization and integration capabilities within existing IT infrastructures, making them the favored choice for mission-critical applications that demand robust data protection and consistent avatar performance without dependency on external networks.
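The latency argument can be made concrete as a simple per-utterance budget: total delay is the sum of the pipeline stages, and a cloud deployment adds a network round trip that local processing avoids. In the Python sketch below, the stage names and every millisecond value are hypothetical placeholders chosen only to show the arithmetic; they are not measurements of any product.

```python
# Hypothetical stage latencies in milliseconds (placeholders, not measurements).
STAGES_MS = {
    "speech_recognition": 150.0,
    "translation": 80.0,
    "animation_synthesis": 40.0,
}


def total_latency_ms(stages: dict[str, float], network_rtt_ms: float = 0.0) -> float:
    """Sum pipeline stage latencies plus any network round trip."""
    return sum(stages.values()) + network_rtt_ms


on_prem = total_latency_ms(STAGES_MS)                      # local processing, no WAN hop
cloud = total_latency_ms(STAGES_MS, network_rtt_ms=120.0)  # assumed WAN round trip

print(f"on-premises: {on_prem:.0f} ms, cloud: {cloud:.0f} ms")  # on-premises: 270 ms, cloud: 390 ms
```

The sketch holds stage times equal in both cases; in practice cloud hardware may run some stages faster, so whether on-premises deployment actually wins depends on measured values for a given setup.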

By End-Use Industry

In 2024, the Healthcare industry represented a substantial 38.6% of the end-use market for real-time sign language avatars. The sector’s pressing need to ensure effective communication between healthcare professionals and patients with hearing impairments drives this adoption.

Real-time sign language avatars facilitate immediate translation of consultations, medical instructions, and emergency information into sign language, improving patient understanding and reducing risks linked to miscommunication.

The healthcare industry’s increasing regulatory focus on accessibility, along with ongoing digital transformation efforts, accelerates the integration of these avatars into telemedicine, patient support, and health education platforms. This not only enhances inclusivity but also lessens reliance on human interpreters and cuts communication costs while improving patient outcomes.
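Because each leading share is quoted for 2024, applying it to the USD 2.4 billion global total gives a rough implied value for that segment. The Python sketch below performs that multiplication on the report's own figures; it assumes every share is measured against the same 2024 global base, and the dictionary labels are illustrative.

```python
GLOBAL_2024_USD_BN = 2.4

# Leading 2024 shares quoted in the report.
LEADING_SHARES = {
    "AI-driven avatars (technology type)": 0.537,
    "On-premises (deployment mode)": 0.689,
    "Healthcare (end-use industry)": 0.386,
    "North America (region)": 0.375,
}


def implied_values(total: float, shares: dict[str, float]) -> dict[str, float]:
    """Convert fractional shares of a market total into absolute values."""
    return {name: total * share for name, share in shares.items()}


for name, value in implied_values(GLOBAL_2024_USD_BN, LEADING_SHARES).items():
    print(f"{name}: about USD {value:.2f} billion")
```

On this basis, North America's 37.5% share corresponds to roughly USD 0.9 billion in 2024, which is consistent with a U.S. market of USD 0.73 billion inside that region.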

Key Market Segments

By Technology Type

- AI-driven Sign Language Avatars

- Motion Capture-based Sign Language Avatars

- Hybrid Systems

By Deployment Mode

- Cloud-based

- On-premises

By End-Use Industry

- Healthcare

- Education

- Consumer Electronics

- Media and Entertainment

- Others

Regional Analysis and Coverage

- North America

- US

- Canada

- Europe

- Germany

- France

- The UK

- Spain

- Italy

- Russia

- Netherlands

- Rest of Europe

- Asia Pacific

- China

- Japan

- South Korea

- India

- Australia

- Singapore

- Thailand

- Vietnam

- Rest of Asia Pacific

- Latin America

- Brazil

- Mexico

- Rest of Latin America

- Middle East & Africa

- South Africa

- Saudi Arabia

- UAE

- Rest of MEA

Emerging Trend

Advancements in AI-Powered Real-Time Sign Language Avatars

The real-time sign language avatar market is rapidly advancing, driven by breakthroughs in artificial intelligence, machine learning, and computer vision technologies. Modern avatars leverage deep learning to provide seamless, real-time translation of spoken or written language into sign language through lifelike 3D animated figures.

This technology is increasingly integrated into accessible communication platforms, educational tools, and healthcare applications, significantly improving inclusivity for deaf and hard-of-hearing communities. Emerging features include personalized avatars capable of representing diverse sign language dialects and more natural, expressive signing that better conveys emotion and context.

Additionally, integration with augmented reality (AR) and virtual reality (VR) creates more immersive learning environments, enhancing user engagement and retention. These developments reflect a broader societal demand for accessible communication and technological inclusivity. Real-time avatars are becoming essential tools that bridge communication gaps in everyday scenarios such as customer service, online learning, and telemedicine.

Driver Analysis

Increasing Demand for Inclusive Communication and Technological Accessibility

A primary driver of the real-time sign language avatar market is the rising global awareness and legislation promoting accessibility for people with hearing impairments. Governments, educational institutions, and healthcare providers are implementing mandates that require accessible communication tools.

Moreover, the proliferation of smartphones, high-speed internet, and cloud computing enables widespread access to these AI-powered avatars anytime, anywhere. Technological improvements in hand and facial gesture recognition, along with natural language processing, also boost market adoption.

These advancements allow for more accurate and fluid translations, overcoming one of the biggest barriers in sign language technology. Educational sectors especially benefit as avatars facilitate inclusive classrooms, remote learning, and personalized study aids.

Restraint Analysis

Challenges in Accurate Sign Language Representation and User Acceptance

Despite significant progress, the market faces critical restraints related to the complexity of accurately representing sign languages in real time. Sign languages have rich grammatical structures, nuanced expressions, and regional dialects that are difficult to capture fully with avatars.
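The grammar point is easy to underestimate: even a crude English-to-gloss step must drop words that have no sign of their own and reorder others. The toy Python sketch below targets American Sign Language (ASL) glossing only. It removes articles and forms of "to be" and moves a time word to the front, reflecting the common time-first ordering in ASL. The word lists are small hypothetical samples, and real sign grammar (facial grammar, use of signing space, classifiers) goes far beyond rules like these.

```python
# Toy English -> ASL-style gloss rules (hypothetical word lists, illustration only).
DROPPED = {"a", "an", "the", "is", "am", "are", "was", "were"}
TIME_WORDS = {"today", "tomorrow", "yesterday", "now"}


def english_to_gloss(sentence: str) -> list[str]:
    """Drop words with no direct sign and move time words to the front."""
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    words = [w for w in words if w and w not in DROPPED]
    time = [w for w in words if w in TIME_WORDS]
    rest = [w for w in words if w not in TIME_WORDS]
    # Glosses are conventionally written in capital letters.
    return [w.upper() for w in time + rest]


print(english_to_gloss("The doctor is busy today."))  # ['TODAY', 'DOCTOR', 'BUSY']
```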

Achieving both linguistic precision and naturalistic movement requires vast training data and sophisticated animation techniques, which remain costly and complex. Additionally, users from deaf communities often express concerns about avatar legibility and authenticity compared to human interpreters, creating hurdles for widespread acceptance.

Maintaining real-time responsiveness while ensuring smooth, expressive signing demands optimized computational resources and efficient AI models. Regulatory and ethical considerations around privacy and data security add further challenges. Overcoming these technological and social obstacles is essential for broader market penetration and trust.
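One common way to keep signing smooth between consecutive signs is to interpolate the avatar's pose from the end of one sign to the start of the next. The Python sketch below blends hypothetical joint-angle poses with a smoothstep easing curve and generates in-between frames at a fixed frame rate. The joint names, angles, and 30 fps target are assumptions, and production avatars typically blend full skeletal rotations (for example, quaternions) rather than independent angles.

```python
# Hypothetical pose format: joint name -> angle in degrees.
Pose = dict[str, float]


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1]: slow start, slow finish."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def blend(a: Pose, b: Pose, t: float) -> Pose:
    """Interpolate every joint from pose a toward pose b at eased progress t."""
    w = smoothstep(t)
    return {joint: a[joint] + (b[joint] - a[joint]) * w for joint in a}


def transition_frames(a: Pose, b: Pose, seconds: float, fps: int = 30) -> list[Pose]:
    """Generate the frames for a transition, including both endpoints."""
    n = max(1, round(seconds * fps))
    return [blend(a, b, i / n) for i in range(n + 1)]


end_of_sign = {"elbow": 90.0, "wrist": 10.0}
start_of_next = {"elbow": 45.0, "wrist": 30.0}
frames = transition_frames(end_of_sign, start_of_next, seconds=0.2)
print(len(frames), frames[len(frames) // 2])  # 7 {'elbow': 67.5, 'wrist': 20.0}
```

At 30 fps the renderer has roughly 33 ms per frame, so per-frame work such as this blend has to stay well inside that budget for the signing to remain real-time.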

Opportunity Analysis

Expansion into Education, Healthcare, and Multilingual Applications

The real-time sign language avatar market presents vast opportunities, particularly in education and healthcare sectors where communication accessibility is critical. Schools and universities increasingly incorporate avatar-based sign language translators to support inclusive learning environments for deaf students.

Healthcare providers utilize avatars to improve patient-provider communication, enhancing service quality and compliance with accessibility laws. Corporate and government services also adopt these avatars to meet diversity and inclusion goals.

Multilingual and multi-dialect avatar development is another growth area, enabling global scalability by covering various sign languages beyond American Sign Language (ASL), such as British Sign Language (BSL), Algerian Sign Language, and others.
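Supporting several sign languages means maintaining a separate sign inventory per language rather than reusing ASL signs; ASL and BSL, for example, are distinct languages with different vocabularies. One simple way to track progress is to measure, per language, how much of a target vocabulary already has an authored or trained sign. In the Python sketch below, the `REGISTRY` contents and clip identifiers are hypothetical placeholders.

```python
# Hypothetical per-language sign inventories: concept -> sign/clip identifier.
REGISTRY = {
    "ASL": {"hello": "asl/hello", "doctor": "asl/doctor", "pain": "asl/pain"},
    "BSL": {"hello": "bsl/hello", "doctor": "bsl/doctor"},
    "Algerian SL": {"hello": "algsl/hello"},
}


def coverage(registry: dict[str, dict[str, str]], vocabulary: set[str]) -> dict[str, float]:
    """Fraction of the target vocabulary each sign language can render."""
    if not vocabulary:
        raise ValueError("target vocabulary must not be empty")
    return {
        language: len(vocabulary & set(signs)) / len(vocabulary)
        for language, signs in registry.items()
    }


target = {"hello", "doctor", "pain", "medicine"}
for language, share in coverage(REGISTRY, target).items():
    print(f"{language}: {share:.0%} of target vocabulary")
```

Reporting coverage per language, instead of silently substituting signs from another language when an entry is missing, keeps gaps visible to the linguists and deaf community partners who fill them.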

Challenge Analysis

High Development Costs and Integration Complexity

A significant challenge for the market involves the high costs and technical complexity of developing real-time avatars that balance linguistic accuracy with engaging, natural animation. Creating comprehensive datasets for diverse sign languages requires extensive collaboration with linguistic experts and deaf communities, which demands time and resources.

Furthermore, integrating avatar technology into existing communication infrastructures and ensuring compatibility across devices and platforms can be daunting for providers. Addressing user skepticism and building trust remains essential, requiring ongoing improvements in avatar realism, ease of use, and transparency about AI limitations.

Developers must also navigate emerging regulations regarding AI ethics, data privacy, and accessibility standards, which can vary across regions. Sustained investment in research, cross-disciplinary partnerships, and user-centered design will be crucial to overcoming these barriers and enabling mainstream adoption.

Competitive Analysis

VSign, SignAll, and XRAI Glass are at the forefront of developing real-time sign language avatar solutions. VSign focuses on high-accuracy avatar animations optimized for various communication platforms. SignAll leverages computer vision and AI-driven recognition to enable precise interpretation across multiple sign languages. XRAI Glass integrates augmented reality with speech-to-text and translation features, targeting accessibility in live conversations.

MotionSavvy, Google LLC, and Microsoft have made significant advancements in scaling avatar-based accessibility solutions. MotionSavvy specializes in portable translation devices tailored for the deaf and hard-of-hearing community. Google LLC incorporates sign language avatars within its AI and cloud frameworks, enhancing inclusivity across its product suite. Microsoft invests in avatar-driven accessibility for virtual meetings and collaboration tools.

AvatarMed, Amazon Web Services, Inc., Signapse, Ltd., Kara Technologies Ltd., and other players contribute diverse innovations to the market. AvatarMed focuses on healthcare applications, enabling sign language support in telemedicine platforms. AWS offers scalable cloud infrastructure that supports real-time avatar rendering and AI processing.

Top Key Players in the Market

- VSign

- SignAll

- XRAI Glass

- MotionSavvy

- Google LLC

- Microsoft

- AvatarMed

- Amazon Web Services, Inc.

- Signapse, Ltd.

- Kara Technologies Ltd.

- Others

Recent Developments

- In May 2025, Google unveiled “SignGemma,” which it described as its most advanced AI model for translating sign language into spoken-language text in real time. The tool is currently in the testing phase and is expected to be released publicly by the end of 2025. SignGemma supports American Sign Language (ASL) and English, promising to bridge major communication gaps for millions of Deaf and hard-of-hearing individuals worldwide.

- Microsoft, in partnership with Israeli AI firm D-ID, revealed in March 2025 their integration of lifelike AI avatars into their Azure platform, aimed at real-time sign language translation and improved accessibility for the Deaf and Hard of Hearing. This advancement goes beyond typical avatars by simulating natural human interaction with voice, facial expressions, and sign language, making communication more intuitive.

Report Scope

| Report Features | Description |
|---|---|
| Base Year for Estimation | 2024 |
| Historic Period | 2020-2023 |
| Forecast Period | 2025-2034 |
| Report Coverage | Revenue forecast, AI impact on market trends, share insights, company ranking, competitive landscape, recent developments, market dynamics, and emerging trends |
| Segments Covered | By Technology Type (AI-driven Sign Language Avatars, Motion Capture-based Sign Language Avatars, Hybrid Systems), By Deployment Mode (Cloud-based, On-premises), By End-Use Industry (Healthcare, Education, Consumer Electronics, Media and Entertainment, Others) |
| Regional Analysis | North America – US, Canada; Europe – Germany, France, The UK, Spain, Italy, Russia, Netherlands, Rest of Europe; Asia Pacific – China, Japan, South Korea, India, New Zealand, Singapore, Thailand, Vietnam, Rest of Asia Pacific; Latin America – Brazil, Mexico, Rest of Latin America; Middle East & Africa – South Africa, Saudi Arabia, UAE, Rest of MEA |
| Competitive Landscape | VSign, SignAll, XRAI Glass, MotionSavvy, Google LLC, Microsoft, AvatarMed, Amazon Web Services, Inc., Signapse, Ltd., Kara Technologies Ltd., Others |
| Customization Scope | Customization for segments and region/country level will be provided. Additional customization can be done based on requirements. |
| Purchase Options | Three license options are available: Single User License, Multi-User License (up to 5 users), and Corporate Use License (unlimited users and printable PDF). |
