Global Micro Large Language Models (Micro LLMs) Market Size, Decision-Making Report By Solution (Software (General Purpose LLMs, Domain Specific LLMs, Multilingual LLMs, Others), Services (Consulting, Development & Training, Support & Maintenance)), By Application (Customer Service, Content Generation, Sentiment Analysis, Code Generation, Chatbots and Virtual Assistant, Language Translation, Others), By Deployment (Cloud, On-premises), By Industry Vertical (IT & Telecom, Healthcare, BFSI, Retail and E-commerce, Media and Entertainment, Others), By Region and Companies - Industry Segment Outlook, Market Assessment, Competition Scenario, Trends and Forecast 2025-2034

- Published date: August 2025

- Report ID: 154723

- Number of Pages: 305


Report Overview

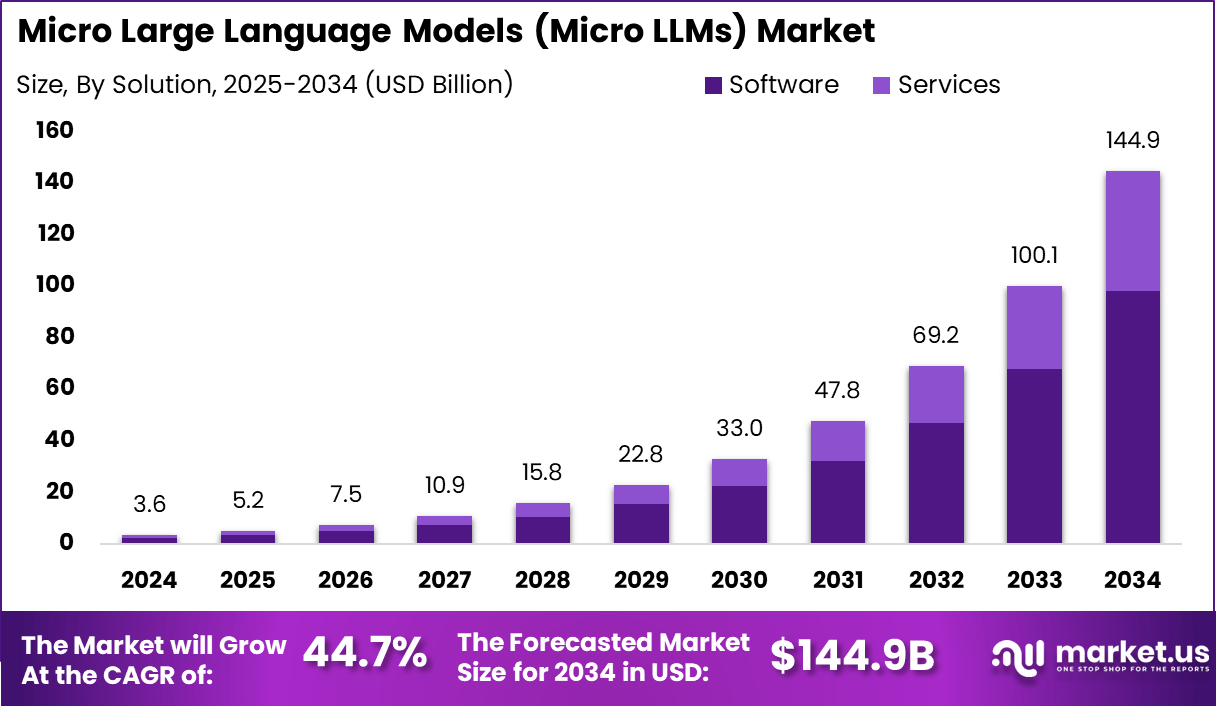

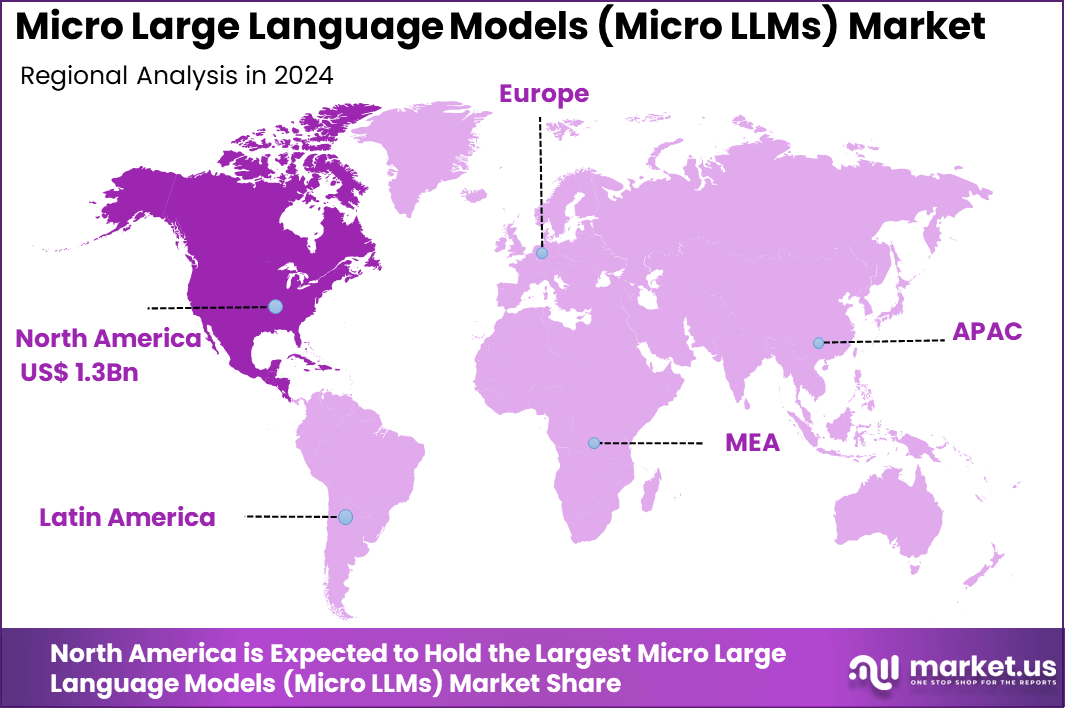

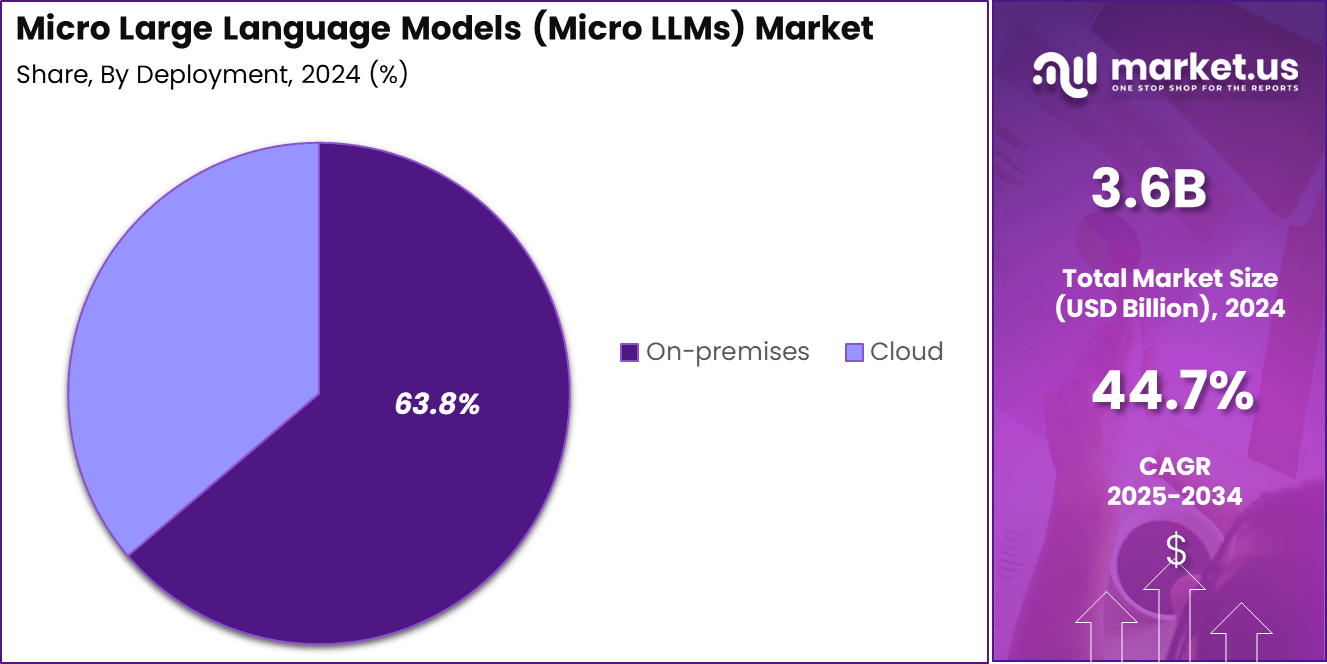

The Global Micro Large Language Models (Micro LLMs) Market size is expected to be worth around USD 144.9 billion by 2034, up from USD 3.6 billion in 2024, growing at a CAGR of 44.7% during the forecast period from 2025 to 2034. In 2024, North America held a dominant market position, capturing more than a 38.7% share, with USD 1.3 billion in revenue.
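The headline growth rate can be checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the function name `cagr` is hypothetical, and it assumes ten compounding years between the 2024 base value and the 2034 forecast, which the report does not state explicitly.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report, in USD billions.
market_2024 = 3.6
market_2034 = 144.9

# Assumption: 2024 is the base year, so 2024 -> 2034 spans 10 compounding years.
global_cagr = cagr(market_2024, market_2034, years=10)
print(f"Implied global CAGR: {global_cagr:.1%}")
```

Under that ten-year assumption the implied rate rounds to the 44.7% quoted in the report.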

The Micro LLMs market focuses on compact language models built with limited parameters to handle specific tasks in areas like finance, logistics, and coding. These models are gaining popularity due to their low resource needs and ability to run efficiently on edge devices. Enterprises prefer them for domain-specific use, avoiding the high cost and complexity of large-scale models. The rise of open-source tools has further boosted access and innovation across industries.

The leading driver of the Micro LLMs market is the surge in demand for efficient, cost-effective AI in industries that require fast, accurate, and privacy-conscious solutions. Industries are shifting towards task-specific language models because these can achieve high performance at a much lower investment of both time and money.

According to Market.us, the Large Language Model (LLM) market is projected to expand robustly, rising from USD 4.5 billion in 2023 to approximately USD 82.1 billion by 2033, at a CAGR of 33.7% between 2024 and 2033. This accelerated growth can be attributed to increasing enterprise demand for advanced NLP solutions, rapid advances in generative AI capabilities, and growing investment in AI infrastructure across industries.

The increasing adoption of technologies such as knowledge graphs, GraphRAG architectures, and modular model ensembles has enhanced the effectiveness of Micro LLMs. These technologies supply structured-context support and continuous data updates, allowing domain‑focused models to remain relevant and accurate over time. That combination yields faster responses and reduced hallucination compared to standalone LLMs.

Market Size and Growth

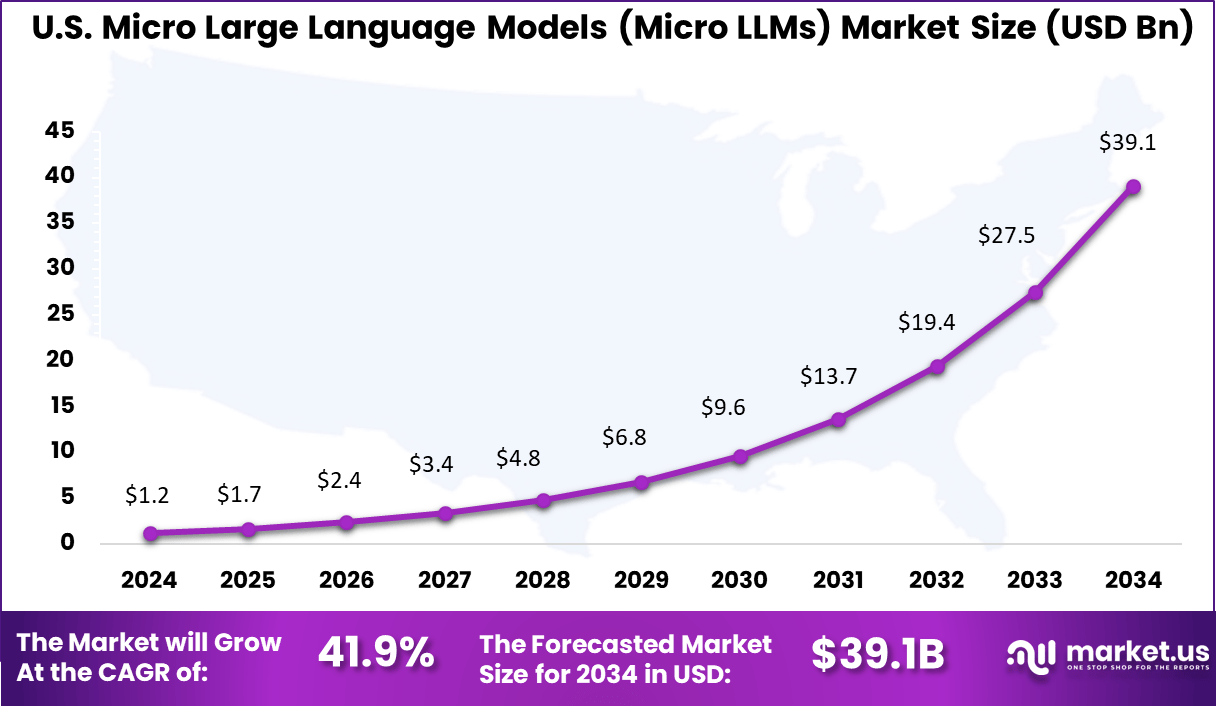

| Metric | Statistic / Value |
| --- | --- |
| Market Value (2024) | USD 3.6 Bn |
| Forecast Revenue (2034) | USD 144.9 Bn |
| CAGR (2025-2034) | 44.7% |
| Leading Segment | Software: 67.7% |
| Region with Largest Share | North America [38.7% Market Share] |
| Largest Country | U.S. [USD 1.18 Bn Market Revenue], CAGR: 41.9% |

Key Insight Summary

- The global market is projected to grow from USD 3.6 billion in 2024 to approximately USD 144.9 billion by 2034, marking a substantial CAGR of 44.7%, driven by demand for efficient, domain-specific AI models.

- North America led the global landscape with a 38.7% share, generating USD 1.3 billion in revenue, reflecting rapid enterprise adoption and strong AI R&D ecosystems.

- The U.S. market alone contributed USD 1.18 billion and is expected to grow at a CAGR of 41.9%, backed by increasing demand for AI that balances performance, privacy, and deployment flexibility.

- The Software segment dominated by solution, holding 67.7%, as organizations prioritize lightweight, adaptable LLMs integrated into existing applications and platforms.

- Chatbots and Virtual Assistants accounted for 30.5% of applications, as Micro LLMs enable fast, cost-efficient, and customizable conversational AI across customer service and enterprise tools.

- The On-premises deployment model captured 63.8%, driven by data-sensitive industries seeking control, privacy, and compliance in localized LLM deployments.

- IT & Telecom emerged as the top vertical with a 33.9% share, leveraging Micro LLMs for network automation, internal operations, and enhanced user interaction.

Analysts’ Viewpoint

The Micro LLM market presents strong investment potential, as businesses reassess their AI strategies for improved performance and reduced costs. Venture capital and consulting firms are increasingly focused on platforms that support fine-tuning, transfer learning, and efficient model building. Customization and integration of Micro LLMs are gaining momentum, especially in finance, healthcare, IT, and retail, where scalable, sector-specific AI solutions are in demand.

Enterprises adopting Micro LLMs report lower operational costs, faster service delivery, and consistent output quality with fewer resources. The ability to run these models on local or edge devices offers added benefits, including better data control and compliance with internal protocols. This flexibility enables businesses to align AI capabilities with specific objectives while maintaining security and regulatory standards.

From a regulatory perspective, Micro LLMs are well-suited to meet strict global privacy laws, as they can be deployed in environments that respect data sovereignty. Their localized deployment offers a key advantage over large cloud-based models. However, the absence of universal performance benchmarks remains a key challenge. To ensure their reliability and safety in critical applications, there is a growing need for joint efforts among industry players, academics, and regulators to establish trustworthy evaluation standards.

Role of AI

| Role/Function | Description |
| --- | --- |
| On-Device Intelligence | Enables AI-powered apps to run without cloud (improving speed, privacy, offline capability) |
| Customized & Edge Inference | Delivers domain-specific and real-time responses for mobile, IoT, and embedded applications |
| Energy Efficient NLP | AI advances allow small-footprint models to deliver strong language capabilities with minimal power |
| Privacy-Preserving AI | Data stays on-device, helping comply with GDPR/CCPA and boosting user trust |
| Democratization of AI | Makes LLM tech accessible to smaller firms, developers, and consumer devices |

US Market Size

The U.S. Micro Large Language Models (Micro LLMs) Market was valued at USD 1.2 Billion in 2024 and is anticipated to reach approximately USD 39.1 Billion by 2034, expanding at a compound annual growth rate (CAGR) of 41.9% during the forecast period from 2025 to 2034.
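As a rough consistency check, the 2034 U.S. figure can be reproduced by compounding the 2024 base at the stated rate. This is a minimal sketch, not the report's methodology: the helper `project` is a hypothetical name, the base uses the USD 1.18 billion figure given elsewhere in the report (rounded to 1.2 in the paragraph above), and ten compounding years are assumed.

```python
def project(base_value: float, annual_rate: float, years: int) -> float:
    """Compound base_value forward at annual_rate for the given number of years."""
    return base_value * (1 + annual_rate) ** years


us_2024 = 1.18   # USD billions, unrounded 2024 U.S. revenue quoted in the report
us_cagr = 0.419  # 41.9% CAGR quoted in the report

# Assumption: 10 compounding years from the 2024 base to 2034.
us_2034 = project(us_2024, us_cagr, years=10)
print(f"Projected 2034 U.S. market: USD {us_2034:.1f} billion")
```

With these inputs the projection rounds to the USD 39.1 billion quoted above; starting from the rounded USD 1.2 billion base instead would give a somewhat higher value.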

The United States is leading the Micro Large Language Models (Micro LLMs) Market due to a combination of advanced AI infrastructure, early adoption by enterprises, and strong government and private sector investment in AI innovation. The country has been at the forefront of foundational model research and development, supported by a mature digital ecosystem and extensive cloud infrastructure.

In 2024, North America held a dominant market position, capturing more than a 38.7% share, with USD 1.3 billion in revenue. This lead can be attributed to the region's advanced AI infrastructure, significant investment from leading technology firms, and early adoption of Micro LLM solutions.

Economies in the United States and Canada benefit from mature cloud environments, pre‑existing AI research ecosystems, and strong partnerships between academic institutions and industry. These factors have accelerated the commercialization of compact language models optimized for enterprise edge deployment.

Emerging Trends

| Trend/Innovation | Description |
| --- | --- |
| Model Compression & Distillation | Techniques that create highly compact, fast models without major accuracy tradeoffs |
| Multimodal Micro Models | Integration of speech, vision, and NLU within lightweight architectures for smarter devices |
| On-Device Personalization | Adaptive models that customize to the user/device over time without cloud retraining |
| Open-Source Expansion | Community-driven micro LLMs accelerate innovation, transparency, and cost-effective integration |
| Privacy-First AI | Growth of privacy-preserving architectures, federated learning, and differential privacy in micro LLM design |

Growth Factors

| Key Factors | Description |
| --- | --- |
| Edge & On-Device AI Demand | Drive for fast, private, offline AI assistants in smartphones, vehicles, smart devices |
| Regulatory Pressure | Need to process sensitive data on-device (health, finance) to meet privacy laws |
| Cost & Latency Reduction | Micro LLMs reduce cloud/server costs and user waiting times for inference |
| Hardware/Model Efficiency | Advances in quantization, pruning, and smaller architectures enable more powerful-yet-efficient language models |
| Industry-Specific Applications | Surge in verticalized models (e.g., healthcare, logistics) that require compact, fast deployment at the device edge |

By Solution Analysis

In 2024, Software solutions lead the way in the Micro LLM market, accounting for 67.7% of total share. Enterprises are increasingly opting for lighter, fine-tuned language model software because it provides targeted capabilities without the heavy resource requirements of massive models. These micro models are optimized for specialized enterprise tasks, delivering strong performance on specific datasets while keeping both costs and latency to a minimum.

The rise of easy-to-integrate software platforms enables organizations to launch AI-driven initiatives quickly, making micro LLM software the backbone for scalable, agile deployment across a wide range of business functions – especially where privacy and customization matter most.

By Application

In 2024, Chatbots and virtual assistants represent the largest use case for micro LLMs, with a 30.5% market share. Organizations favor these compact yet powerful models for powering automated conversations in customer support, IT helpdesks, and digital commerce.

Their ability to deliver fast, accurate, and personalized interactions – often with lower hardware costs and minimal footprint – makes micro LLMs ideal for embedding AI into websites, mobile apps, and internal workflows. Compared to general-purpose massive LLMs, micro models offer high efficiency for routine tasks, processing large volumes of queries without sacrificing response quality or data privacy.

By Deployment

In 2024, On-premises deployment is the dominant model for micro LLMs (63.8%), reflecting heightened enterprise needs for control, security, and compliance. With micro LLMs, organizations can deploy powerful AI models within their own infrastructure, ensuring that sensitive data never leaves secure environments.

This is particularly valued by sectors handling proprietary or regulated information, where maximum privacy and tailored performance are priorities. Advances in deployment tools and hardware have reduced the complexity of running LLMs locally, allowing even mid-sized firms to own and manage their AI stacks while minimizing ongoing costs.

By Industry Vertical

In 2024, the IT and telecom sector leads in adopting micro LLMs, holding 33.9% market share. These industries leverage micro models to streamline operations – from automating network management and ticketing to powering smart customer interactions and maintaining cybersecurity protocols.

The rapid shift to digital operations and the need for scalable AI solutions that balance efficiency, cost, and privacy make micro LLMs particularly attractive for IT and telecom organizations. Their customizable nature and robust integration capabilities allow for use cases that larger, less-focused models often cannot match, positioning micro LLMs as essential tools for digital transformation across the sector.

Key Market Segments

By Solution

- Software

  - General Purpose LLMs

  - Domain Specific LLMs

  - Multilingual LLMs

  - Others

- Services

  - Consulting

  - Development & Training

  - Support & Maintenance

By Application

- Customer Service

- Content Generation

- Sentiment Analysis

- Code Generation

- Chatbots And Virtual Assistant

- Language Translation

- Others

By Deployment

- Cloud

- On-premises

By Industry Vertical

- IT & Telecom

- Healthcare

- BFSI

- Retail And E-commerce

- Media And Entertainment

- Others

Key Regions and Countries

- North America

  - US

  - Canada

- Europe

  - Germany

  - France

  - The UK

  - Spain

  - Italy

  - Russia

  - Netherlands

  - Rest of Europe

- Asia Pacific

  - China

  - Japan

  - South Korea

  - India

  - Australia

  - Singapore

  - Thailand

  - Vietnam

  - Rest of Asia Pacific

- Latin America

  - Brazil

  - Mexico

  - Rest of Latin America

- Middle East & Africa

  - South Africa

  - Saudi Arabia

  - UAE

  - Rest of MEA

Driver

Demand for Efficient, Task-Specific AI

A primary force steering the micro LLMs market forward is the high demand for agile, cost-effective, and specialized artificial intelligence solutions. As organizations become increasingly digital, they face a need for tools that address very specific tasks – whether it’s generating custom code snippets, analyzing environmental data on the edge, or aiding with customer service responses directly on users’ devices.

Unlike traditional large-scale models that consume massive resources, micro LLMs are built to operate smoothly on limited hardware, making them ideal for mobile apps, embedded systems, or local enterprise servers. This efficiency lets businesses and developers implement advanced AI without the headaches of high cloud costs or privacy concerns tied to sending sensitive data off-device.

In addition, micro LLMs are often easier to fine-tune for sector-specific needs. For instance, a healthcare provider can deploy an in-house AI assistant trained solely on medical guidelines, ensuring accuracy and compliance without risking data leakage. This adaptability, paired with low computational demands, opens creative and practical avenues – especially where cloud connectivity is unreliable or where data must stay strictly on-premises.

Restraint

Limitations in Performance and Scalability

Despite their growing popularity, micro LLMs come with a significant restraint: they can’t always match the depth, reasoning power, or multitasking abilities of larger language models. Because they are trained on smaller, more specialized datasets, micro LLMs may lack the rich contextual awareness or nuanced understanding needed for complex tasks, such as multi-step reasoning or cross-domain problem-solving.

Enterprises sometimes find that while micro LLMs work well for targeted use cases, they stumble when faced with broader requirements, limiting where and how these solutions can be deployed effectively. These limitations can create friction, especially for organizations that hope to eventually scale their AI usage.

For example, a logistics company using micro LLMs for quick in-warehouse decision support might later want advanced analytics or dynamic scenario planning, only to discover that significant upgrades – or a switch to a macro model – are necessary. This potential for growth “dead ends” means organizations must carefully balance their current needs against their future ambitions, often resulting in a hybrid adoption strategy but complicating implementation and resource allocation.

Opportunity

Widening Access and Democratization of AI

Micro LLMs open the door to a tremendous opportunity: the true democratization of artificial intelligence. By drastically lowering the technical and financial entry barriers, these models empower a far wider community of developers, educators, startups, and even non-technical professionals to leverage advanced AI for solving local or niche challenges.

Whether in rural farming, local healthcare clinics, or resource-strapped schools, the ability to run powerful language models on affordable devices with minimal energy or network needs means real-world impact is no longer restricted to tech giants or well-funded labs. This accessibility reaches beyond direct users as well.

Open-source ecosystems and grassroots collaborations are flourishing around micro LLMs, driving technical innovation and rapid knowledge sharing. This communal environment is helping push both the science and standards of responsible deployment, with more eyes on issues like bias, privacy, and safe usage. Ultimately, micro LLMs are building a future where everyone, from a smartphone owner in a network-limited region to an educator in a small-town classroom, can access and shape how AI is put to work for human good.

Challenge

Balancing Security, Privacy, and Responsible Use

The journey for micro LLMs is not without formidable challenges. Deploying these smaller models in sensitive industries like healthcare, finance, or government requires careful navigation of technical and ethical concerns. Even though micro LLMs may reduce the risk of mass data exposure compared to cloud reliance, they still face heightened scrutiny around data security, regulatory compliance, and risk of misuse.

A lightweight, locally run model can become a vulnerability if not properly maintained or vetted – not just technically, but in terms of transparency and accountability. There’s also the ongoing struggle to balance open innovation with responsible governance. Open-source communities drive fast improvements, but without strict standards or oversight, there’s greater potential for the spread of models that may not be fully reliable, fair, or safe.

Competitive Landscape

In the Micro Large Language Models (Micro LLMs) market, Microsoft, Google LLC, and OpenAI LP are recognized as major technology leaders. These companies have significantly advanced small-scale LLMs through investments in fine-tuning, edge computing integration, and AI model optimization. Their continued focus on responsible AI and language safety features has enhanced trust across enterprises.

Another prominent group includes Meta Platforms, Inc., Amazon.com, Inc., and Alibaba Group Holding Limited. These firms have scaled Micro LLM deployment for commercial chatbots, developer tools, and localized digital assistants. With strong data infrastructure and AI research arms, they are focusing on high-efficiency LLMs that can run on mobile and edge devices.

Asia-based leaders such as Huawei Technologies Co., Ltd., Tencent Holdings Limited, Baidu, Inc., and Yandex NV are also expanding their presence. These players focus on language-specific model training, edge AI hardware compatibility, and data sovereignty. Regional innovation hubs and strong government backing have supported these advancements. Their Micro LLMs are increasingly adopted for smart city projects, fintech applications, and multilingual customer service tools.

Top Key Players in the Market

- Alibaba Group Holding Limited

- Amazon.com, Inc.

- Baidu, Inc.

- Google LLC

- Huawei Technologies Co., Ltd.

- Meta Platforms, Inc.

- Microsoft

- OpenAI LP

- Tencent Holdings Limited

- Yandex NV

- Others

Recent Developments

- In January 2025, Alibaba Cloud globally launched new Qwen micro-LLMs, notably the Qwen2.5 series (7B–72B parameters), expanding its Model Studio offering. These models empower global developers with affordable and efficient AI solutions suitable for specialized tasks and edge devices.

- Baidu announced Ernie 5 in February 2025, slated for launch in the second half of 2025. This micro-LLM will have multimodal abilities, processing text, video, images, and audio. The announcement is a direct response to surging market competition and the emergence of highly optimized, cost-effective models like DeepSeek from competitors.

- Google has quickly caught up with massive advances in the Gemini family. In early 2025, the Gemini 2.5 Flash-Lite model became one of their fastest, most efficient micro-LLMs, offering 1M+ token context windows at extremely low latency, suitable for real-time and edge scenarios.

Report Scope

| Report Features | Description |
| --- | --- |
| Base Year for Estimation | 2024 |
| Historic Period | 2020-2023 |
| Forecast Period | 2025-2034 |
| Report Coverage | Revenue forecast, AI impact on Market trends, Share Insights, Company ranking, competitive landscape, Recent Developments, Market Dynamics and Emerging Trends |
| Segments Covered | By Solution (Software (General Purpose LLMs, Domain Specific LLMs, Multilingual LLMs, Others), Services (Consulting, Development & Training, Support & Maintenance)), By Application (Customer Service, Content Generation, Sentiment Analysis, Code Generation, Chatbots and Virtual Assistant, Language Translation, Others), By Deployment (Cloud, On-premises), By Industry Vertical (IT & Telecom, Healthcare, BFSI, Retail and E-commerce, Media and Entertainment, Others) |
| Regional Analysis | North America – US, Canada; Europe – Germany, France, The UK, Spain, Italy, Russia, Netherlands, Rest of Europe; Asia Pacific – China, Japan, South Korea, India, New Zealand, Singapore, Thailand, Vietnam, Rest of Asia Pacific; Latin America – Brazil, Mexico, Rest of Latin America; Middle East & Africa – South Africa, Saudi Arabia, UAE, Rest of MEA |
| Competitive Landscape | Alibaba Group Holding Limited, Amazon.com, Inc., Baidu, Inc., Google LLC, Huawei Technologies Co., Ltd., Meta Platforms, Inc., Microsoft, OpenAI LP, Tencent Holdings Limited, Yandex NV, Others |
| Customization Scope | Customization for segments and at the region/country level will be provided. Additional customization can be done based on requirements. |
| Purchase Options | Three licenses are available: Single User License, Multi-User License (Up to 5 Users), Corporate Use License (Unlimited User and Printable PDF) |
