Global AI Inference Gateways Market Size, Share Report By Component (Software, Hardware, Services), By Deployment Mode (On-Premises, Cloud), By Application (Healthcare, Finance, Retail, Manufacturing, Automotive, IT and Telecommunications, Others), By Enterprise Size (Small and Medium Enterprises, Large Enterprises), By End-User (BFSI, Healthcare, Retail and E-commerce, Media and Entertainment, Manufacturing, IT and Telecommunications, Others), By Regional Analysis, Global Trends and Opportunity, Future Outlook By 2025-2034

- Published date: Dec. 2025

- Report ID: 169343

- Number of Pages: 215


Quick Navigation

- Report Overview

- Key Takeaway

- Role of Generative AI

- Investment and Business Benefits

- U.S. Market Size

- Component Analysis

- Deployment Mode Analysis

- Application Analysis

- Enterprise Size Analysis

- End-User Analysis

- Emerging trends

- Growth Factors

- Key Market Segments

- Drivers

- Restraint

- Opportunities

- Challenges

- Key Players Analysis

- Company Use case and Benefits for customers

- Recent Developments

- Future Outlook with Opportunities

- Report Scope

Report Overview

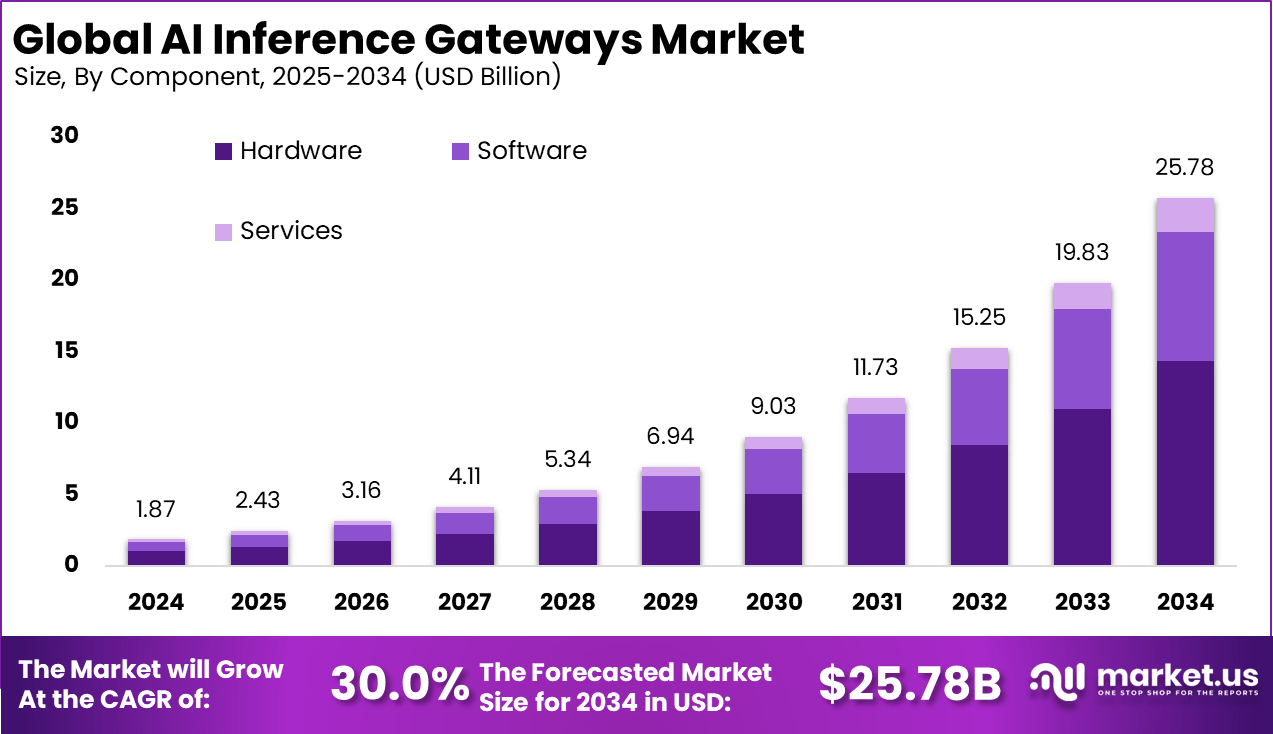

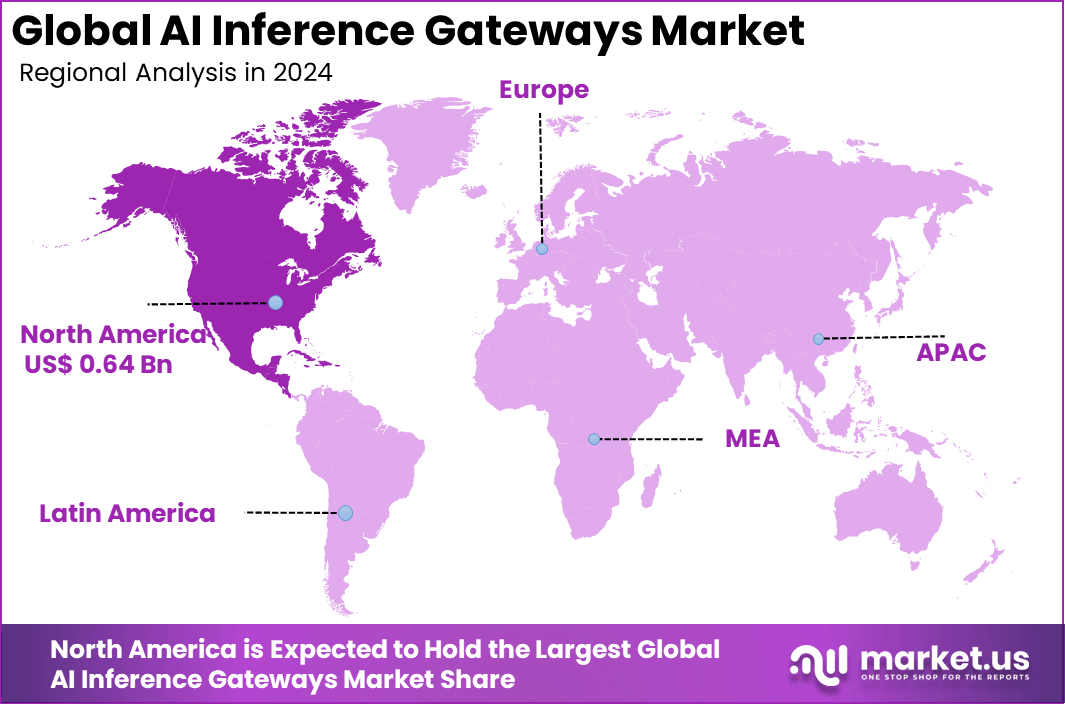

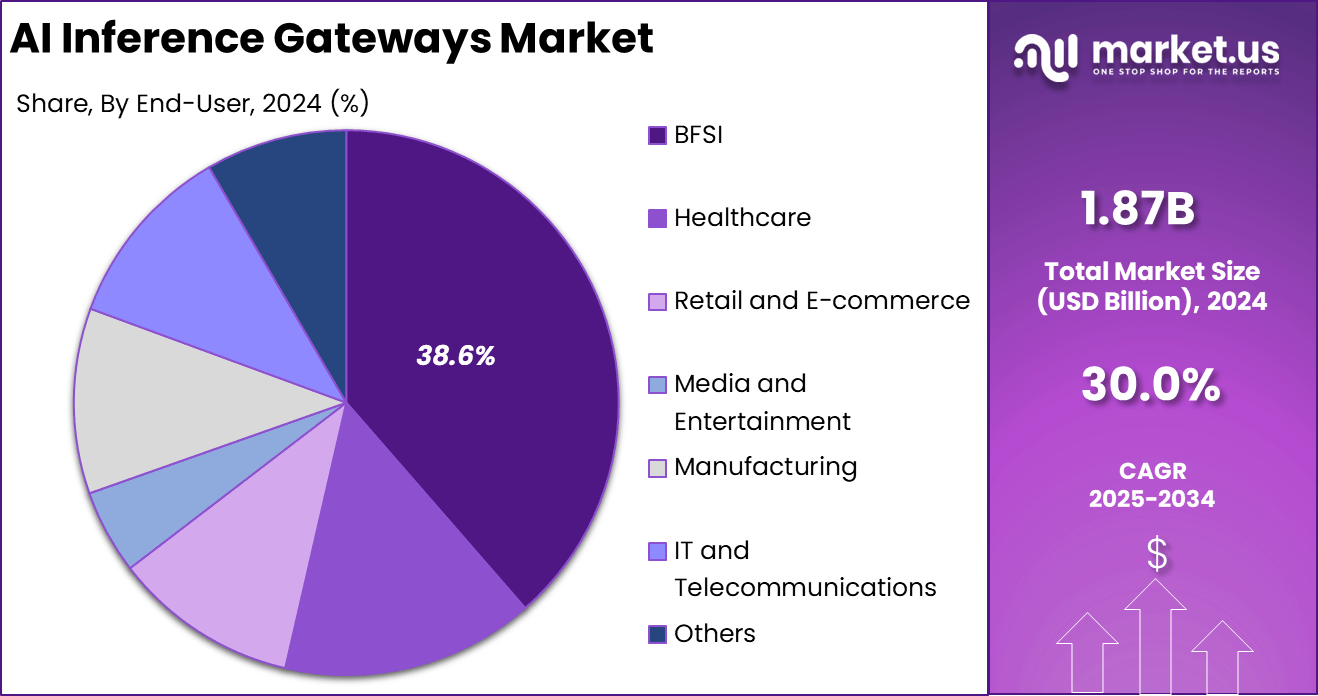

The Global AI Inference Gateways Market size is expected to be worth around USD 25.78 billion by 2034, up from USD 1.87 billion in 2024, growing at a CAGR of 30.0% during the forecast period from 2025 to 2034. North America held a dominant market position in 2024, capturing more than 34.6% of the market and USD 0.64 billion in revenue.
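The headline figures can be cross-checked with the standard compound-growth relation, end value = start value × (1 + CAGR)^years. This is a minimal sketch, assuming the 30.0% rate compounds over ten annual periods from the 2024 base year to 2034:

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Back out the constant annual growth rate that links two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report, in USD billions; 2024 -> 2034 is assumed to be 10 periods.
base_2024 = 1.87
forecast_2034 = 25.78

projected = project_value(base_2024, 0.30, 10)
rate = implied_cagr(base_2024, forecast_2034, 10)
print(f"Projected 2034 value at 30% CAGR: USD {projected:.2f} billion")
print(f"Implied CAGR: {rate:.1%}")
```

Running it reproduces roughly USD 25.78 billion and an implied rate of about 30.0%, so the base value, growth rate, and forecast are mutually consistent.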

The AI inference gateways market has expanded as organizations move from AI model development to large-scale, real-time deployment. Growth reflects rising demand for fast, secure, and controlled delivery of AI predictions across digital services. Inference gateways now serve as the traffic-control layer between AI models and end users, managing requests, responses, and compute efficiency across cloud, edge, and hybrid environments.

The primary force driving AI inference gateways is the need for immediate decisions in industries such as retail and automotive, where real-time data processing is critical. By enabling analytics at the edge, these gateways reduce the load on central networks during busy periods and improve responsiveness. Generative AI models in particular need low latency, which increases the value of placing inference closer to users, while faster parallel processing cuts delays significantly.

For instance, in December 2025, AWS unveiled Flexible Training Plans for SageMaker AI inference endpoints at re:Invent, guaranteeing GPU capacity and supporting vLLM streaming for agentic apps. Paired with bidirectional audio and text support, these updates push low-latency inference into everyday production use.

Key Takeaway

- Hardware dominated with 55.6% in 2024, showing that performance-intensive AI workloads continue to rely on dedicated physical gateways for faster inference.

- On-premises deployment captured 74.7%, reflecting strong preference for local control, low latency, and strict data security in real-time AI processing.

- The finance segment held 39.7%, highlighting heavy use of AI inference for fraud detection, real-time risk scoring, and transaction monitoring.

- Large enterprises represented 70.4%, confirming that complex, high-volume AI environments remain concentrated within large organizations.

- The BFSI segment accounted for 38.6%, driven by rising demand for secure, real-time decision systems across banking and financial services.

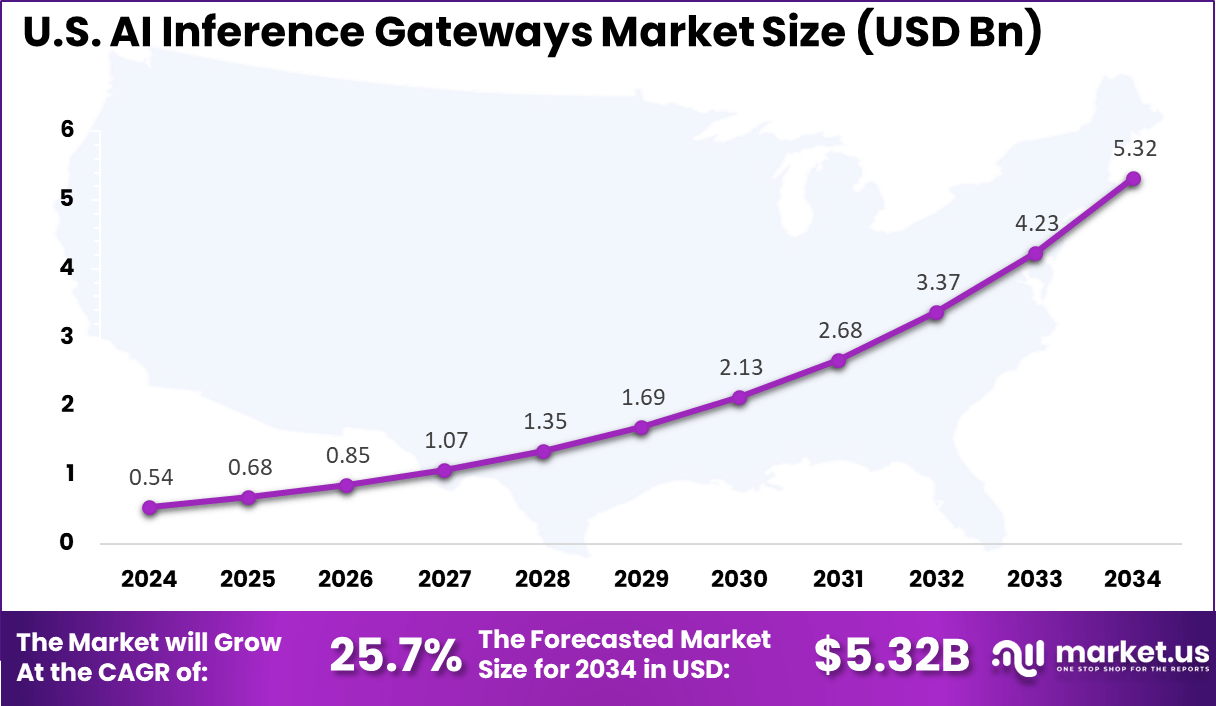

- The U.S. market reached USD 0.54 billion in 2024 and is expanding at a strong 25.7% CAGR, reflecting rapid acceleration of edge AI and real-time analytics adoption.

- North America held 34.6% of the global market, supported by advanced AI infrastructure, early enterprise adoption, and strong cloud-to-edge integration.

Role of Generative AI

Generative AI plays a crucial role in AI inference gateways, which serve models that create complex content such as text, images, and audio during live interactions. These gateways manage how requests are directed to different AI models, helping deliver fast and accurate results.

Generative AI already accounts for a significant portion of AI workloads, reflecting high demand for real-time content generation. Serving these workloads lets inference gateways support advanced capabilities such as enriching responses with updated information, which improves the reliability of AI outputs across applications.

With the rise of large-scale models containing billions of parameters, inference gateways have adapted to manage high volumes of data processing without compromising speed. Most of the investments in AI infrastructure focus on strengthening the systems that support these generative AI tasks. This trend highlights the growing reliance on inference gateways to handle the complex processing demands that come with deploying generative AI at scale.

Investment and Business Benefits

Investment opportunities in AI inference gateways focus mainly on edge computing, where processing data locally meets strict data privacy rules and reduces latency. Custom gateway solutions designed for specific industries like retail or energy offer potential for value creation by addressing specialized data handling needs.

Growth in hybrid AI systems, which combine cloud and edge resources, attracts funding because they deliver flexible, scalable AI processing. Investing in technologies that support distributed inference can unlock efficiencies and serve expanding markets that require real-time, localized AI capabilities.

AI inference gateways improve business operations by reducing the need to send large amounts of data back and forth, which cuts bandwidth use and lowers network costs. They increase application reliability by processing data in real time, which enhances user satisfaction and operational uptime. These gateways also help companies optimize hardware usage, such as GPUs, by sharing resources efficiently and focusing computing power on the most demanding tasks. The result is lower operational costs and better performance, making AI initiatives more sustainable and productive across industries.
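One simple way to picture sharing GPUs while focusing compute on the most demanding tasks is a proportional split of a shared GPU pool by queued demand. This is an illustrative policy assumed for the sketch, not how any particular product schedules GPUs; the model names and queue sizes are hypothetical.

```python
from math import floor


def allocate_gpus(total_gpus: int, queued_requests: dict[str, int]) -> dict[str, int]:
    """Split a shared GPU pool across models in proportion to queued demand.

    Illustrative policy only. Largest-remainder rounding keeps the
    allocations summing to total_gpus whenever there is any demand.
    """
    total_demand = sum(queued_requests.values())
    if total_demand == 0:
        return {model: 0 for model in queued_requests}
    # Exact (fractional) share of the pool each model "deserves".
    shares = {m: total_gpus * q / total_demand for m, q in queued_requests.items()}
    alloc = {m: floor(s) for m, s in shares.items()}
    leftover = total_gpus - sum(alloc.values())
    # Hand the remaining GPUs to the models with the largest fractional remainders.
    by_remainder = sorted(shares, key=lambda m: shares[m] - alloc[m], reverse=True)
    for m in by_remainder[:leftover]:
        alloc[m] += 1
    return alloc


# Hypothetical model names and queue depths.
print(allocate_gpus(8, {"chat-llm": 600, "vision": 300, "fraud-scoring": 100}))
```

With 8 GPUs and queues of 600, 300, and 100 requests, the split comes out as 5, 2, and 1 GPUs. A real scheduler would also weigh model memory footprints and latency targets.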

U.S. Market Size

The U.S. market for AI inference gateways is growing rapidly: it was valued at USD 0.54 billion in 2024 and is projected to grow at a CAGR of 25.7%. This growth is driven by increasing adoption of AI in finance and the broader BFSI sector, where real-time data processing and low-latency inference are critical.

Enterprises demand robust hardware and secure on-premises deployments to comply with strict data privacy regulations. Advances in AI hardware, cloud collaborations, and edge computing are accelerating uptake. Large enterprises’ focus on AI-driven automation and quick decision-making further fuels market expansion, positioning the U.S. as a major growth hub.

For instance, in October 2025, Google Cloud enhanced its AI Hypercomputer with GKE Inference Gateway, now generally available, featuring prefix-aware load balancing that dramatically reduces latency for recurring AI prompts while optimizing throughput and costs on Cloud TPUs. This innovation solidifies U.S.-based hyperscalers’ lead in efficient, model-aware inference serving for enterprise-scale deployments.
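The idea behind prefix-aware routing can be illustrated with a short sketch: requests that share a long prompt prefix (for example, a common system prompt) are steered to the same model-server replica so that the replica can reuse cached computation for that prefix. This is a simplified sketch, not Google's implementation; the character-based prefix, rendezvous hashing, load cap, and replica names are assumptions chosen for illustration.

```python
import hashlib


def _score(prefix: str, replica: str) -> int:
    """Deterministic score used for rendezvous (highest-random-weight) hashing."""
    digest = hashlib.sha256(f"{prefix}|{replica}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pick_replica(prompt: str, replicas: list[str], load: dict[str, int],
                 prefix_chars: int = 64, max_load: int = 8) -> str:
    """Send prompts that share a prefix to the same replica unless it is overloaded.

    prefix_chars and max_load are illustrative assumptions; real gateways
    typically work on tokens and live server metrics.
    """
    prefix = prompt[:prefix_chars]
    # Every prompt with this prefix ranks the replicas in the same order.
    ranked = sorted(replicas, key=lambda r: _score(prefix, r), reverse=True)
    for replica in ranked:
        if load.get(replica, 0) < max_load:
            return replica
    # Every replica is saturated: fall back to the least-loaded one.
    return min(replicas, key=lambda r: load.get(r, 0))
```

Rendezvous hashing is used here because adding or removing a replica only remaps the prefixes that scored highest on that replica, which preserves most cache locality.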

In 2024, North America held a dominant market position in the Global AI Inference Gateways Market, capturing more than 34.6% of the market and USD 0.64 billion in revenue. This dominance is due to its advanced technology infrastructure and a high concentration of AI innovators.

The region benefits from extensive investments in AI hardware and cloud services, supported by leading enterprises in sectors like finance and BFSI. Strong regulatory frameworks and a focus on data security drive adoption of on-premises deployments. Additionally, North America’s robust research ecosystem and early adoption culture accelerate market growth and innovation in AI inference gateways.

For instance, in November 2025, Microsoft integrated AI Gateway directly into Azure API Management within Microsoft Foundry at Ignite 2025, enabling seamless governance, observation, and security for AI inference workloads. This positions Azure as a leader in enterprise AI access layers, supporting adaptive routing and quota management for North America’s dominant cloud AI ecosystem.
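Quota management of the kind described is commonly implemented with a token bucket per consumer. The sketch below is a generic illustration, not Azure API Management's policy engine; the tokens-per-minute limit, the consumer names, and the 200/429 return convention are assumptions.

```python
import time
from typing import Optional


class TokenBucket:
    """Per-consumer quota: holds up to `capacity` tokens, refilled at `refill_per_sec`."""

    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = capacity              # start with a full bucket
        self.last: Optional[float] = None   # time of the previous call

    def try_consume(self, amount: float, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if self.last is not None:
            # Refill in proportion to elapsed time, capped at capacity.
            elapsed = max(0.0, now - self.last)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if amount <= self.tokens:
            self.tokens -= amount
            return True
        return False


class QuotaGateway:
    """Admits or throttles requests per consumer (200 = admit, 429 = throttle)."""

    def __init__(self, tokens_per_minute: float) -> None:
        self.tokens_per_minute = tokens_per_minute
        self.buckets: dict[str, TokenBucket] = {}

    def admit(self, consumer: str, estimated_tokens: int, now: Optional[float] = None) -> int:
        # Each consumer gets its own bucket, created on first use.
        bucket = self.buckets.setdefault(
            consumer, TokenBucket(self.tokens_per_minute, self.tokens_per_minute / 60)
        )
        return 200 if bucket.try_consume(estimated_tokens, now) else 429
```

For example, with a hypothetical limit of 600 tokens per minute, a consumer that spends 500 tokens must wait about nine seconds before a 200-token request is admitted, while other consumers are unaffected.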

Component Analysis

In 2024, the Hardware segment held a dominant market position, capturing a 55.6% share of the Global AI Inference Gateways Market. This dominance is due to hardware's ability to handle the complex, real-time data processing that AI workflows require. Its robustness ensures that AI models perform without lag, which is critical for applications that need immediate responses and high efficiency.

The reliance on dedicated hardware is especially strong in environments where speed and power cannot be compromised. Organizations tend to favor hardware-based solutions as these provide consistent, high-performance computing and lower latency compared to software-only options. This translates into smoother operations and more reliable AI outcomes.

For instance, in October 2025, NVIDIA shared updates on hardware optimizations that boost AI inference performance, using Pareto curves to show the trade-off between throughput and responsiveness. Its work with Google Cloud on A4X Max VMs, which use NVIDIA GB300 systems, targets low-latency inference. This hardware focus helps frontier models run smoothly, giving teams large gains in speed and efficiency.

Deployment Mode Analysis

In 2024, the On-Premises segment held a dominant market position, capturing a 74.7% share of the Global AI Inference Gateways Market. This preference is driven by organizations’ need to maintain strict control over their data, ensuring compliance with privacy regulations and reducing risks associated with data transfer to third parties.

Moreover, on-premises deployment cuts down on latency since the AI inference happens close to the source of data. This setup is especially beneficial in industries where real-time decision-making is critical, such as finance and healthcare. The control and speed advantages make on-premises gateways the favored choice for many enterprises.

For instance, in December 2025, AWS launched AI Factories, bringing sovereign AI on-premises for governments and regulated sectors. These act like private regions, giving secure, low-latency access to compute and storage inside customers' own facilities while AWS manages the deployment. This shortens timelines for data-sensitive workloads.

Application Analysis

In 2024, the Finance segment held a dominant market position, capturing a 39.7% share of the Global AI Inference Gateways Market. Financial institutions use these gateways to analyze risks, detect fraud, and understand customer behavior in real time. Fast, reliable outputs are vital to their operations, where every millisecond counts.

This sector’s high adoption rate stems from its requirement for both speed and security. AI inference gateways enable banks and financial organizations to run complex models efficiently while safeguarding sensitive financial data, aligning well with regulatory demands and operational needs.

For instance, in July 2025, IBM and AWS joined forces to combine data analytics with cloud infrastructure for business AI. The partnership targets finance applications that need real-time inference on structured data and lowers barriers to using generative AI for risk checks and customer insights, giving financial firms faster, compliant processing.

Enterprise Size Analysis

In 2024, the Large Enterprises segment held a dominant market position, capturing a 70.4% share of the Global AI Inference Gateways Market. These companies implement AI gateways to manage extensive workloads across multiple departments and locations, maximizing efficiency and productivity at scale. Their investment in AI infrastructure reflects a strategic approach to digitization and automation.

The scale of operations in large enterprises requires AI inference to be reliable, fast, and flexible, something that AI gateways enable. Smaller businesses often face challenges in adopting similar technologies due to cost and complexity, leaving large enterprises to lead innovation while benefiting from AI’s enhanced capabilities.

For instance, in November 2025, HPE expanded its NVIDIA AI portfolio for secure enterprise deployments at scale. The new offerings target large operations, including gigascale AI factories built with BlueField technology, and power inference for complex, high-volume tasks reliably. Large enterprises gain efficiency in power use and network performance.

End-User Analysis

In 2024, the BFSI segment held a dominant market position, capturing a 38.6% share of the Global AI Inference Gateways Market. BFSI organizations use AI gateways to enhance fraud detection, regulatory compliance, and risk management while maintaining strict data privacy standards. The sector's operational demands are driving heavy usage of inference gateways.

BFSI’s commitment to leveraging AI gateways also reflects the growing complexity of financial ecosystems. The need to process vast amounts of sensitive data swiftly and securely makes AI gateways indispensable. By deploying such technology, BFSI can innovate while ensuring robust protection against cyber threats and operational risks.

For instance, in March 2025, Hugging Face integrated Cerebras Inference into its platform, letting developers select Cerebras for high-speed inference on open-source models such as Llama 3.3 70B with low latency. BFSI end users can tap this capability for secure, rapid financial insights.

Emerging trends

Emerging trends in AI inference gateways emphasize processing data closer to where it is generated to reduce delays, especially in environments like autonomous vehicles and smart manufacturing. This approach helps cut down on the time and bandwidth needed to send data back to centralized clouds. Many organizations today use AI extensively, with edge computing becoming a preferred method to improve responsiveness and efficiency in their AI services.

Another important trend is the increasing sophistication in directing AI requests through gateways, which choose the most suitable model or path for each specific task. This has led to improved performance and reliability. Additionally, AI inference gateways now often include features to monitor and log operations, supporting ongoing maintenance and optimization of AI models while cloud resources handle larger-scale analytics and data management.
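A minimal routing policy of this kind can be sketched as follows: the gateway picks the cheapest backend that supports the requested task within the caller's latency budget and logs each decision for later monitoring. The backend names, latency figures, and costs are hypothetical placeholders; real gateways may weigh many more signals, such as queue depth, cache state, and model quality.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("inference-gateway")


@dataclass(frozen=True)
class ModelRoute:
    name: str              # hypothetical backend identifier
    tasks: frozenset       # task types this backend can serve
    p95_latency_ms: float  # assumed typical tail latency
    cost_per_1k: float     # relative cost units (illustrative)


# Hypothetical routing table.
ROUTES = [
    ModelRoute("small-classifier", frozenset({"fraud_score", "classify"}), 15, 0.1),
    ModelRoute("mid-llm", frozenset({"chat", "summarize"}), 400, 1.0),
    ModelRoute("large-llm", frozenset({"chat", "summarize", "reason"}), 1200, 5.0),
]


def route(task: str, latency_budget_ms: float) -> ModelRoute:
    """Pick the cheapest backend that supports the task within the latency budget."""
    candidates = [r for r in ROUTES
                  if task in r.tasks and r.p95_latency_ms <= latency_budget_ms]
    if not candidates:
        log.warning("no backend for task=%s within %.0f ms", task, latency_budget_ms)
        raise LookupError(f"no route for {task!r}")
    choice = min(candidates, key=lambda r: r.cost_per_1k)
    # The log line doubles as an audit trail for later tuning.
    log.info("task=%s budget=%.0fms -> %s", task, latency_budget_ms, choice.name)
    return choice
```

For example, `route("chat", 500)` selects `mid-llm`, while `route("reason", 2000)` falls through to `large-llm` because it is the only backend that supports that task.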

Growth Factors

The expansion of AI inference gateways is fueled by the need for minimal delay in real-time applications found in sectors like entertainment, healthcare, and automotive technology. Advances in semiconductor technology and software optimizations are critical in enabling these gateways to handle demanding AI workloads efficiently.

Investments in generative AI have grown sharply, contributing to the development of more capable and responsive inference solutions. AI inference gateways also support integration across multiple data sources and systems, which is important for fields requiring immediate decision-making, like medical diagnostics and autonomous driving.

The desire for scalable solutions that do not demand excessive capital investments in hardware is pushing innovation in gateway design. At the same time, energy efficiency and sustainability are becoming key considerations in the deployment of AI infrastructure.

Key Market Segments

By Component

- Software

- Hardware

- Services

By Deployment Mode

- On-Premises

- Cloud

By Application

- Healthcare

- Finance

- Retail

- Manufacturing

- Automotive

- IT and Telecommunications

- Others

By Enterprise Size

- Small and Medium Enterprises

- Large Enterprises

By End-User

- BFSI

- Healthcare

- Retail and E-commerce

- Media and Entertainment

- Manufacturing

- IT and Telecommunications

- Others

Regional Analysis and Coverage

- North America

- US

- Canada

- Europe

- Germany

- France

- The UK

- Spain

- Italy

- Russia

- Netherlands

- Rest of Europe

- Asia Pacific

- China

- Japan

- South Korea

- India

- Australia

- Singapore

- Thailand

- Vietnam

- Rest of Asia Pacific

- Latin America

- Brazil

- Mexico

- Rest of Latin America

- Middle East & Africa

- South Africa

- Saudi Arabia

- UAE

- Rest of MEA

Drivers

Growth in the AI inference gateways market is supported by increasing demand for fast and local processing of data. Many applications such as industrial control systems, automated inspection, retail monitoring, and connected devices require immediate responses. Sending data to distant servers introduces delay, so organizations prefer processing that happens close to the data source.

Another driver is rising concern about handling sensitive information. Many industries operate under strict privacy and data protection rules. When data is processed locally through inference gateways instead of being transmitted to remote servers, the risk of exposure is reduced. This approach is important in healthcare, public safety, and industrial sites where operators prefer to keep information on local systems.

For instance, in November 2025, Google Cloud launched GKE Inference Gateway, a tool that routes traffic intelligently to AI models on Kubernetes clusters. It cuts latency and boosts throughput for real-world deployments such as chatbots and image analysis, letting firms run more models at scale without heavy rework.

Restraint

Despite the promise of AI inference gateways, hardware costs remain a key restraint. Building and running these systems often requires specialized processors, such as GPUs or AI accelerators, which raise capital expenditure significantly. Regulated sectors like finance, government, and defense tend to absorb these costs to retain control of sensitive workloads, but smaller organizations often struggle to justify such investments.

Rising component prices and limited availability further slow market penetration among startups and mid-tier firms. Additionally, on-premises infrastructure adds another layer of expense through maintenance, space, and power consumption. While some firms offset costs by using cloud-based inference, sectors with strict data protection policies prefer local setups for compliance reasons.

For instance, in December 2025, AWS debuted Trainium3 UltraServers and AI Factories with NVIDIA GB300, which require substantial hardware for on-premises sovereign AI. Custom builds in regulated fields carry high costs, and many firms weigh them against long-term cloud fees.

Opportunities

A strong opportunity exists in sectors looking to expand use of local AI processing. Industries such as smart manufacturing, logistics, surveillance, retail, and healthcare have growing needs for on-site analysis of video, sensor, and machine data. Gateways that simplify deployment of AI models and support consistent performance across different locations can attract interest from these sectors.

There is also opportunity in combining gateways with newer hardware designed specifically for inference tasks. Many manufacturers are releasing chips and small processors optimized for running trained models efficiently. Gateways that integrate smoothly with this hardware can help reduce operating costs and improve performance, creating a clearer path for organizations to scale local AI deployments.

For instance, in August 2025, Google Cloud tuned GKE for edge-style inference with 400 Gbps links and model-aware load balancing, serving dense workloads near data sources. This complements 5G networks in enabling quicker decisions in retail and automotive.

Challenges

A key challenge is maintaining uniform performance across different sites and devices. Edge environments are not consistent. Some locations have stable power, strong connectivity, and controlled temperatures, while others do not. Ensuring that inference gateways perform reliably across all these conditions requires ongoing monitoring and adjustments. This adds effort for organizations managing large distributed systems.
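Ongoing monitoring can start with something as simple as comparing each site's tail latency against the rest of the fleet. This sketch assumes per-site latency samples are already being collected; the site names, the 95th-percentile metric, and the 1.5× tolerance are illustrative choices, not an industry standard.

```python
from statistics import median, quantiles


def p95(samples: list[float]) -> float:
    """Estimate the 95th percentile (last of 19 cut points when n=20)."""
    # method="inclusive" keeps the estimate within the observed range.
    return quantiles(samples, n=20, method="inclusive")[-1]


def flag_slow_sites(latencies_ms: dict[str, list[float]],
                    tolerance: float = 1.5) -> list[str]:
    """Flag sites whose p95 latency exceeds `tolerance` x the fleet-wide median p95."""
    site_p95 = {site: p95(samples) for site, samples in latencies_ms.items()}
    fleet_median = median(site_p95.values())
    return sorted(site for site, value in site_p95.items()
                  if value > tolerance * fleet_median)


# Hypothetical per-site samples in milliseconds.
samples = {
    "store-01": [20, 22, 21, 23, 25, 24, 22, 21, 20, 26],
    "store-02": [19, 21, 22, 20, 24, 23, 21, 22, 20, 25],
    "plant-07": [80, 95, 90, 100, 85, 110, 92, 88, 97, 105],
}
print(flag_slow_sites(samples))
```

Comparing against the fleet median rather than a fixed threshold lets the check adapt as models or hardware change, while still surfacing sites with poor power, connectivity, or cooling.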

Another challenge is updating AI models across many gateways. Once a model is deployed at the edge, it must be kept current with new versions, security updates, and performance improvements. Managing these updates across many devices can be complex, and mistakes may lead to inconsistent results or security risks. This operational difficulty may slow adoption in organizations without strong support systems.
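One common way to reduce the risk of fleet-wide model updates is a staged (canary) rollout: update a small slice of gateways, check their health, then widen. This sketch assumes hypothetical `deploy` and `healthy` callbacks supplied by the operator's own tooling; the wave sizes are illustrative, and a real system would also roll back the affected slice.

```python
from typing import Callable


def staged_rollout(gateways: list[str], new_version: str,
                   deploy: Callable[[str, str], None],
                   healthy: Callable[[str], bool],
                   waves: tuple[float, ...] = (0.05, 0.25, 1.0)) -> list[str]:
    """Push a model version to gateways in growing waves; stop at the first unhealthy wave.

    `deploy` and `healthy` are hypothetical operator-supplied callbacks.
    Returns the gateways that received the new version.
    """
    updated: list[str] = []
    for fraction in waves:
        # Cumulative target size for this wave (at least one gateway).
        target = max(1, round(fraction * len(gateways)))
        wave = gateways[len(updated):target]
        for gw in wave:
            deploy(gw, new_version)
        updated.extend(wave)
        if not all(healthy(gw) for gw in wave):
            # Halt so the problem stays confined to a small slice of the fleet.
            return updated
    return updated
```

With 40 gateways and the default waves, the update reaches 2, then 10, then all 40 gateways, and a failed health check in any wave stops the rollout before the next one begins.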

For instance, in October 2025, IBM released AI agents on Oracle Fusion Marketplace, stressing compliance for enterprise inference. Healthcare and finance users add extra layers to meet GDPR requirements, and development slows as teams build in safeguards from the start.

Key Players Analysis

Among the leading players, Akamai launched Inference Cloud in October 2025, a distributed edge AI inference platform built on NVIDIA Blackwell infrastructure that delivers low-latency, real-time AI inference from core data centers to the edge. The platform integrates NVIDIA RTX PRO 6000 Blackwell GPUs, BlueField DPUs, and AI Enterprise software across Akamai's global edge network of over 4,200 locations, with initial availability in 20 sites and plans for broader rollout.

Company Use case and Benefits for customers

| Company Name | Use Case | Benefits for Customers |
|---|---|---|
| NVIDIA | Accelerating model inference on GPUs and specialized systems | Faster responses, support for larger models, and better hardware utilization |
| Google | Hosting and scaling AI models via cloud inference services | Easy deployment, global reach, and strong integration with data and tools |
| Microsoft | Serving models through cloud AI and AI gateway features | Centralized control, enterprise security, and smooth app integration |
| Amazon Web Services (AWS) | Managed inference endpoints for a wide range of AI workloads | Flexible scaling, pay-as-you-go pricing, and rich ecosystem integrations |
| IBM | Enterprise AI platforms with governed model serving | Strong compliance, lifecycle management, and industry-specific solutions |
| Meta (Facebook) | Open models and tooling for large-scale inference | Access to advanced models, community support, and rapid experimentation |
| Alibaba Cloud | Cloud-based AI inference for regional and global users | Localized services, competitive pricing, and integration with commerce |
| Tencent Cloud | Industry-focused AI inference services in cloud and edge settings | Tailored solutions, low-latency options, and regional data hosting |
| Baidu | AI cloud and edge inference for search, speech, and vision | Optimized performance for language and vision tasks and strong China reach |
| Oracle | AI inference integrated with database and enterprise applications | Tight data-AI linkage, security, and support for ERP and database stacks |
| Intel | CPUs and accelerators optimized for inference workloads | Broad hardware options, energy efficiency, and edge-friendly solutions |
| Hugging Face | Model hub and hosted inference APIs | Quick access to many models, simple APIs, and rapid prototyping |
| Seldon | Open-source model serving and MLOps for inference at scale | Portability, Kubernetes-native control, and reduced vendor lock-in |
| Cerebras Systems | Specialized systems for large model inference | High throughput, support for very large models, and shorter runtimes |
| OctoML | Automated optimization and deployment of inference workloads | Lower latency, reduced cloud costs, and hardware flexibility |
| Run:AI | Orchestration of AI inference and training workloads on shared clusters | Better GPU utilization, queue management, and cost control |
| Verta | Model catalog, deployment, and governance for inference | Traceability, version control, and safer production rollouts |
| DataRobot | End-to-end AI platform with automated deployment for inference | Faster time to value, no-code options, and managed monitoring |
| Anyscale | Distributed inference using Ray across clusters and clouds | Horizontal scaling, resilience, and support for large concurrent loads |
| Weights & Biases | Monitoring and operations support for models in inference | Experiment tracking, performance observability, and easier optimization |
| Others | Various cloud, chip, and software vendors supporting inference needs | Niche capabilities, regional focus, and specialized vertical solutions |

Top Key Players in the Market

- NVIDIA

- Google

- Microsoft

- Amazon Web Services (AWS)

- IBM

- Meta (Facebook)

- Alibaba Cloud

- Tencent Cloud

- Baidu

- Oracle

- Intel

- Hugging Face

- Seldon

- Cerebras Systems

- OctoML

- Run:AI

- Verta

- DataRobot

- Anyscale

- Weights & Biases

- Others

Recent Developments

- In October 2025, Google Cloud rolled out GKE Inference Gateway, which cuts latency and boosts throughput for AI models on Kubernetes through prefix-aware load balancing. This makes serving large language models smoother and more cost-effective, especially for apps with repeated prompts, and lets teams handle larger workloads with less operational effort.

- In October 2025, Microsoft Azure enhanced the AI Gateway in API Management with better backend handling and extended Arc-enabled AKS for hybrid AI from cloud to edge. The update adds KAITO model serving and offline AI Foundry capabilities, keeping edge inference secure and scalable even when disconnected, in line with the push to run AI operations anywhere.

Future Outlook with Opportunities

Gateways will advance toward full AI agent orchestration, discovering and composing model capabilities on the fly for complex workflows. This enables autonomous systems that chain inferences intelligently, opening doors in automation-heavy sectors.

Federated learning support is likely to grow next, allowing secure model updates across distributed sites without moving data. Opportunities also exist in policy engines that enforce ethics and sustainability rules uniformly. As AI maturity rises, gateways may evolve into observability hubs for bias detection and green computing. Adaptive platforms that meet these needs are well positioned for sustained expansion in a multi-agent world.
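The aggregation step at the heart of federated averaging (FedAvg) is short enough to sketch. Each site trains locally and reports only its model weights and sample count; the coordinator combines them as a sample-weighted mean. Flat Python lists stand in for real model tensors here, and secure aggregation, which production systems typically add, is omitted.

```python
def federated_average(site_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg aggregation: average model weights, weighted by each site's sample count.

    Only parameters leave each site; the raw training data stays local.
    """
    total = sum(n for _, n in site_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(site_updates[0][0])
    avg = [0.0] * dim
    for weights, n in site_updates:
        if len(weights) != dim:
            raise ValueError("all sites must share the same model shape")
        # Sites with more local data pull the global model further.
        for i, w in enumerate(weights):
            avg[i] += w * n / total
    return avg


# Two hypothetical sites: one with 100 samples, one with 300.
print(federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))
```

Here the larger site carries three times the weight, so the combined parameters land at 2.5 and 3.5 rather than the unweighted midpoints of 2.0 and 3.0.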

Report Scope

| Report Features | Description |
|---|---|
| Market Value (2024) | USD 1.87 Bn |
| Forecast Revenue (2034) | USD 25.78 Bn |
| CAGR (2025-2034) | 30.0% |
| Base Year for Estimation | 2024 |
| Historic Period | 2020-2023 |
| Forecast Period | 2025-2034 |
| Report Coverage | Revenue forecast, AI impact on market trends, share insights, company ranking, competitive landscape, recent developments, market dynamics, and emerging trends |
| Segments Covered | By Component (Software, Hardware, Services), By Deployment Mode (On-Premises, Cloud), By Application (Healthcare, Finance, Retail, Manufacturing, Automotive, IT and Telecommunications, Others), By Enterprise Size (Small and Medium Enterprises, Large Enterprises), By End-User (BFSI, Healthcare, Retail and E-commerce, Media and Entertainment, Manufacturing, IT and Telecommunications, Others) |
| Regional Analysis | North America: US, Canada; Europe: Germany, France, The UK, Spain, Italy, Russia, Netherlands, Rest of Europe; Asia Pacific: China, Japan, South Korea, India, New Zealand, Singapore, Thailand, Vietnam, Rest of Asia Pacific; Latin America: Brazil, Mexico, Rest of Latin America; Middle East & Africa: South Africa, Saudi Arabia, UAE, Rest of MEA |
| Competitive Landscape | NVIDIA, Google, Microsoft, Amazon Web Services (AWS), IBM, Meta (Facebook), Alibaba Cloud, Tencent Cloud, Baidu, Oracle, Intel, Hugging Face, Seldon, Cerebras Systems, OctoML, Run:AI, Verta, DataRobot, Anyscale, Weights & Biases, Others |
| Customization Scope | Customization for segments and region/country-level analysis will be provided. Additional customization can be done based on requirements. |
| Purchase Options | Three licenses are available: Single User License, Multi-User License (up to 5 users), and Corporate Use License (unlimited users and printable PDF) |
